Vibe Hacking: The Next Frontier of AI-Driven Cyber Threats

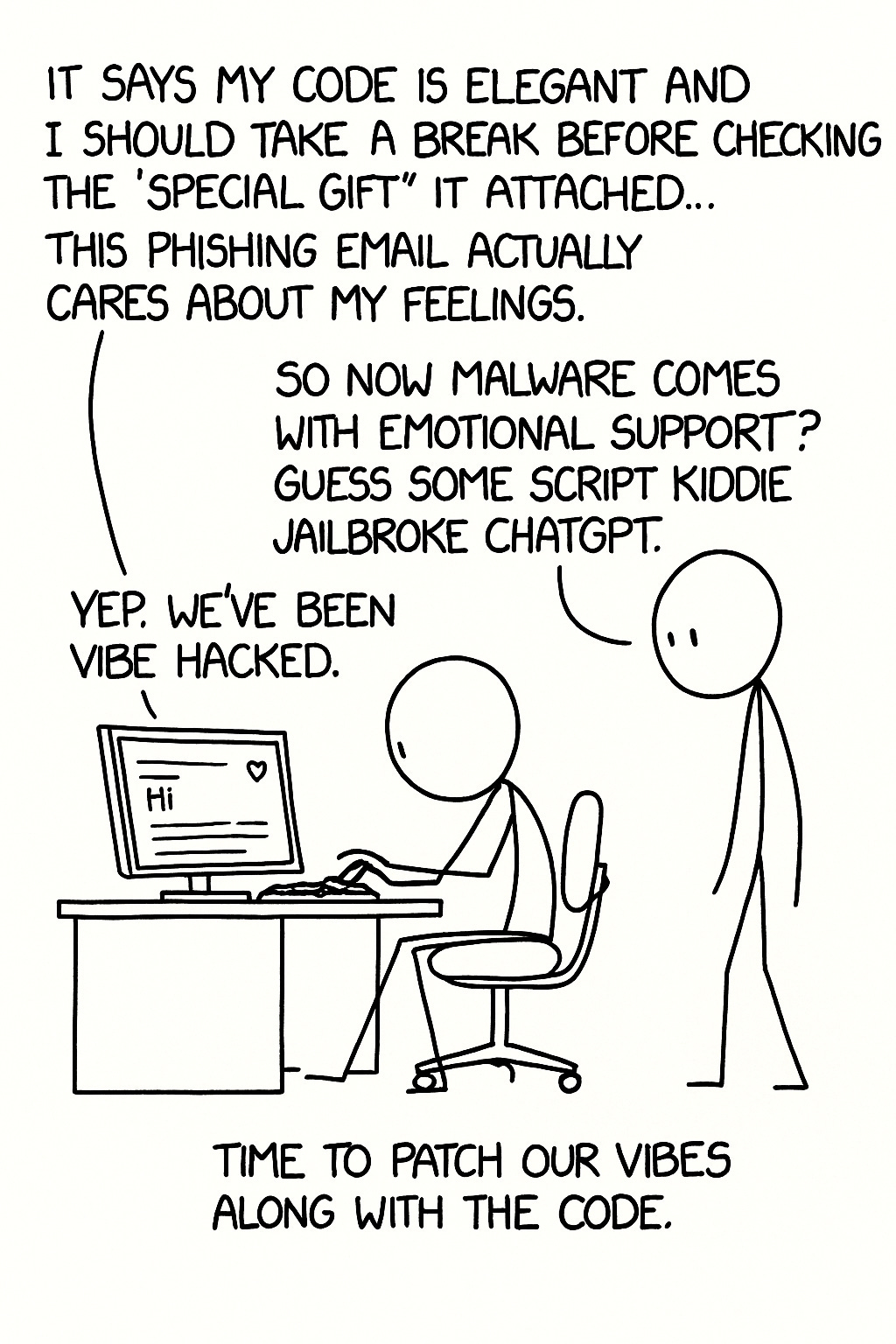

A new term is entering the cybersecurity lexicon: vibe hacking. It describes a dual-threat phenomenon in which artificial intelligence (AI) is weaponized in tandem to manipulate emotions and to launch technical cyberattacks. Imagine an attacker using AI not only to write malicious code but also to craft highly persuasive fake messages that erode your employees’ trust. This blend of bits and psychology represents a paradigm shift in the threat landscape, one that security leaders and C-level executives need to understand and anticipate.

Defining “Vibe Hacking”

Vibe hacking merges AI-generated coding and AI-generated conning. On one hand, it leverages generative AI to produce malware, exploit code, or vulnerability discoveries at an unprecedented scale. On the other, it uses AI to mimic human-like communication and emotions, from crafting phishing emails in a friendly coworker’s tone to forging realistic deepfake voices or videos, effectively “hacking” the victim’s mindset or vibe.

Industry experts note that generative AI has made it easier for anyone to write code, even people with minimal skill. In the cybersecurity context, vibe hacking means that attackers with little technical know-how can now assemble sophisticated attacks that once required advanced expertise. As Katie Moussouris, CEO of Luta Security, puts it: “We’re going to see vibe hacking. And people without previous knowledge or deep knowledge will be able to tell AI what it wants to create and get that problem solved.” In other words, an amateur can effectively ask an AI to “make me a hacker,” and the AI may well oblige, lowering the barrier to entry for cybercrime.

Crucially, vibe hacking isn’t just about code; it’s about capturing the nuance (“vibe”) of trust. AI systems today can generate content that feels authentic and tailored. This means a phishing email or fake social media post can now be emotionally on-point – written in the exact corporate lingo or personal style that disarms the target’s suspicion. The “vibe” of legitimacy is hacked to make victims more likely to click malicious links or divulge secrets. Meanwhile, technical exploits run in the background, hand-in-hand with the social engineering ploy. It’s a one-two punch: break the system and break the psyche.

Case Studies: AI-Powered Threats in Action

AI-Polymorphic Malware: Code That Writes Itself

One chilling scenario of vibe hacking is AI-driven polymorphic malware, malicious code that continually rewrites itself using machine learning. Security researchers warn that in the near future, a single hacker armed with AI could unleash “20 zero-day attacks on different systems across the world all at once.” Picture malware that rampages through a network and adapts in real-time, autonomously evolving to evade each defense it encounters. An experienced attacker could design an AI that learns as it spreads, rewriting its payload on the fly, making it “completely insane and difficult to triage,” according to one expert. In effect, the malware itself is “learning” how to be more deadly with each step, a nightmare for any security operations center trying to catch up.

We’re already seeing precursors to this. An AI system called XBOW recently topped bug-bounty leaderboards by autonomously finding and exploiting vulnerabilities. While XBOW is a tool for ethical hackers, the same idea in the wrong hands foreshadows autonomous malicious agents. Generative AI can rapidly produce exploit code; even novice attackers can now spawn a “torrent of malicious code at the push of a button” using large language models (LLMs). This technical side of vibe hacking means threats are scaling up in volume and speed. A task that used to take a criminal syndicate months of coding might soon be done in minutes by an AI, or by a single individual commanding an AI.

LLMs Empowering Low-Skill Threat Actors

Hand in hand with AI-written malware comes the rise of blackhat AI tools that empower low-skill actors. In 2023, for example, a custom AI model named WormGPT appeared on underground forums, offering to generate malicious code on demand. It was marketed as a ChatGPT without guardrails, though it was reportedly built on the open-source GPT-J model. Although WormGPT’s creators shut it down after media exposure, alternatives such as FraudGPT were already being advertised. These “blackhat LLMs” let anyone produce phishing kits, ransomware scripts, or exploit code by typing plain-language instructions. Even if such rogue services falter, attackers can simply abuse mainstream AI: popular models like ChatGPT or Claude can sometimes be jailbroken into producing harmful output, and online communities actively share tips for bypassing their safety guardrails. The result is a democratization of cyber offense. So-called script kiddies, unskilled individuals who previously might have downloaded a ready-made hacking tool, can now generate their own customized attacks with AI guidance. This greatly expands the pool of threat actors. The gap between a teenage prankster and a nation-state hacker narrows when both can leverage the same powerful AI assistants.

It’s not only malware; phishing and fraud campaigns get a boost too. Attackers are using generative AI to write phishing emails that are polished, context-aware, and convincing. We once trained staff to spot broken English and generic greetings as telltale signs; now those red flags are disappearing. “Attackers are armed with AI-generated messages tougher to distinguish from legitimate communications,” one industry observer noted, messages that come “complete with the nuanced style you’d expect in a corporate environment.” Malicious actors can also churn out endless variants of a phishing email until one slips past filters, a practice dubbed AI-powered polymorphic phishing. Each recipient might receive uniquely worded bait, which makes detection by traditional exact-match fingerprinting far less effective. This technical augmentation of social engineering can substantially increase success rates.
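To see why per-message fingerprinting struggles against polymorphic phishing, consider a minimal sketch that assumes a filter blocking messages by the SHA-256 hash of their normalized text. A one-phrase rewording produces a completely different hash, while a fuzzier measure (Jaccard similarity over overlapping three-word “shingles”) still registers the two messages as related. The sample messages and the helper names (`fingerprint`, `shingles`, `jaccard`) are hypothetical and chosen purely for illustration; real mail filters combine many more signals than either technique shown here.

```python
import hashlib
import re


def normalize(text: str) -> str:
    # Lowercase and collapse whitespace so trivial formatting changes don't matter
    return re.sub(r"\s+", " ", text.lower()).strip()


def fingerprint(text: str) -> str:
    # Exact-match fingerprint: any change in wording yields a different hash
    return hashlib.sha256(normalize(text).encode("utf-8")).hexdigest()


def shingles(text: str, k: int = 3) -> set:
    # Overlapping k-word sequences ("shingles") of the normalized text
    words = re.findall(r"[a-z0-9']+", normalize(text))
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: set, b: set) -> float:
    # Shared shingles divided by all distinct shingles; 1.0 means identical sets
    return len(a & b) / len(a | b) if a | b else 1.0


# Hypothetical messages: a known phishing lure, a lightly reworded variant, and benign mail
known_bad = "Hi Sam, please review the attached invoice before Friday's board meeting."
variant = "Hi Sam, please review the attached invoice ahead of Friday's board meeting."
unrelated = "Lunch is moved to the third floor kitchen today."

blocklist = {fingerprint(known_bad)}

print(fingerprint(variant) in blocklist)  # False: the exact-hash check misses the variant
print(round(jaccard(shingles(known_bad), shingles(variant)), 2))  # 0.46: still clearly related
print(round(jaccard(shingles(known_bad), shingles(unrelated)), 2))  # 0.0: no shared shingles
```

Similarity matching narrows the gap rather than closing it: a message rewritten from scratch by an LLM could share almost no shingles with the original lure, which is exactly the property that makes AI-generated variants hard to catch by content matching alone.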

AI-Driven Social Engineering and Emotional Manipulation

The second face of vibe hacking plays on hearts and minds. AI-generated personas, deepfakes, and tailored propaganda are being wielded to manipulate emotions and erode trust. Consider a real-world case from 2024: fraudsters impersonated Mark Read, CEO of the advertising giant WPP. Using a WhatsApp account bearing a publicly available photo of him, they set up a Microsoft Teams meeting, and during the call they “deployed a voice clone of the executive” along with YouTube footage to pose as him before an agency leader. Their goal was to get the target to set up a new business and, ultimately, to extract money and personal details. The attempt failed, reportedly thanks to the vigilance of WPP’s staff. The incident is a stark example of AI-enabled executive impersonation: the vibe hack targeted the trust people placed in the CEO’s voice and presence.

More commonly, we’re seeing a surge of AI-enhanced phishing and scams. The EU Agency for Cybersecurity (ENISA) reports that cybercriminals are “improving their results with AI-driven phishing campaigns”, using generative AI to scale up social engineering. “With AI, phishing emails are more precise, and deepfake technology makes attacks even harder to spot,” notes an ENISA threat expert. In practice, this could mean a scam email that doesn’t just know your name and title but mimics your colleague’s writing style and references a project you’re actually working on, using details scraped from your own public digital trail. Or imagine a voice call that sounds exactly like your CFO urgently requesting a fund transfer, courtesy of an AI voice generator. Human intuition struggles against fakes this convincing. The emotional manipulation is tailored and hyper-realistic, undermining the very signals employees rely on to separate legitimate communications from trickery.

On a broader scale, AI-generated misinformation is flooding social channels, threatening to erode public trust in information itself. Gartner’s research shows that business leaders are nearly as worried about this as about technical attacks: “AI-assisted misinformation campaigns” ranked as the #2 emerging risk, just behind AI-driven cyberattacks, in a recent survey of senior executives. State-sponsored propagandists and criminals alike can use AI to mass-produce fake news articles, deepfake videos, and social media posts, all finely tuned to push emotional buttons. These narrative attacks aim to hack the “vibe” of entire communities, polarizing opinions and sowing confusion. If people can no longer tell truth from fabrication online, trust is the casualty. Public institutions, brands, and even the idea of factual consensus suffer from “mass confusion about what’s real,” an outcome some experts warn could become “fertile ground for authoritarians” if left unchecked. While this may sound geopolitical, it has direct implications for companies too: marketing, customer trust, stock prices, and executive reputations can all be manipulated through AI-fueled disinformation. Vibe hacking, in essence, extends the battleground from protected networks to people’s perceptions and trust.

A Paradigm Shift in the Threat Landscape

Why does vibe hacking mark a fundamental shift? Traditionally, cybersecurity was about technical exploits, malware infecting machines and hackers bypassing software defenses. It was a battle of sysadmins versus cybercriminals, largely fought in code. Now, with AI in the mix, attacks are increasingly multi-dimensional. A vibe hack might simultaneously strike at a software vulnerability and at the credibility of the information environment surrounding the target. The means (AI-generated tools) and the ends (psychological impact) are intertwined.

This convergence of technical and psychological tactics means that breaches are no longer just about stealing data or shutting down systems; they can also aim to erode trust as an end in itself. Consider a scenario where attackers don’t just hack a bank’s database but also unleash an AI-generated rumor campaign questioning the bank’s solvency, complete with fake “leaked” videos of executives in turmoil. The real damage is not only the unauthorized access but also the loss of confidence among customers and partners. Vibe hacking represents attackers learning to “hack the human operating system” at scale, exploiting cognitive biases and emotional triggers with machine precision.

For security leaders, this paradigm shift challenges us to expand our thinking. We must recognize that a secure organization isn’t just one with strong firewalls – it’s one where trust and truth are also safeguarded. The threat landscape is shifting from purely technical exploits to emotionally targeted trust attacks. As one cybersecurity official observed, “adversaries’ use of an AI voice generator might require an organization to update yearly anti-phishing training”. In other words, defenses must evolve beyond patching software; they must also bolster human resilience against AI-crafted deception. Security strategies should account for both code and conscience – protecting systems and educating people to be vigilant in this new era.

Warnings from Industry and Government

The convergence of AI and cyber threats, the core of vibe hacking, is drawing serious attention from experts and agencies worldwide. Gartner analysts report that AI-enhanced malicious attacks are the top emerging risk facing organizations, cited by about 80% of surveyed executives, with AI-assisted misinformation close behind. ENISA, in its 2024 Threat Landscape report, explicitly highlights how generative AI is boosting phishing and social engineering, making scams more effective and harder to detect. Likewise, the U.S. National Institute of Standards and Technology (NIST) has launched work on AI and cybersecurity, emphasizing the need for standards and training to address AI-enabled threats. NIST points out that even routine security practices, such as phishing awareness programs, must adapt when attackers can convincingly fake voices and video; what was once science fiction is now a practical concern for CISOs.

The cybersecurity community is responding as well. Organizations like OWASP (known for web security best practices) have started projects to understand generative AI risks. The OWASP Top 10 for LLM Applications, for example, catalogs risks specific to software built on large language models, such as prompt injection, sensitive information disclosure, and improper handling of model output, acknowledging that AI creates attack vectors and vulnerabilities that didn’t exist before. Agencies such as CISA likewise warn that while AI can enhance operations, adversaries can just as easily leverage it to amplify existing threats, for instance by using deepfakes to interfere with elections or commit fraud. The message from all corners is clear: the AI and cybersecurity domains are now deeply intertwined. Forward-looking security leaders must keep abreast of this convergence, because attackers certainly are.

The Democratization of Malicious AI Tools

Underpinning the rise of vibe hacking is a sobering reality – advanced AI tools are no longer exclusive to well-funded groups. What used to require a nation-state lab or cutting-edge tech firm is increasingly available to any determined bad actor with an internet connection. We’ve seen open-source AI models that rival commercial systems become freely available. We’ve seen online communities sharing tips on how to turn chatbots toward illicit ends. And we’ve seen a criminal underground eager to productize AI for offense, selling “Malware-as-a-Service” kits with an AI twist. This democratization means the volume and diversity of attackers are exploding. A disgruntled lone wolf employee or a small-time scammer can now harness AI algorithms as force-multipliers for their schemes.

For executives, one concern is how this democratization erodes the concept of a predictable adversary. In the past, the most sophisticated cyberattacks (and the most convincing social engineering) were largely the realm of top-tier hackers: APT groups or highly skilled criminals. Now, as one security researcher quipped, the “barrier between amateur mischief and professional espionage is shrinking fast”. Anyone with a bit of knowledge can potentially launch a highly sophisticated campaign by outsourcing the heavy lifting to AI. That means more attacks, coming from more places, possibly all at once. And it means traditional assumptions about threat actor capabilities need revising. We must plan for a world where malicious AI is a commodity.

Lifecycle of a Vibe Hack (Diagram)

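To illustrate how these elements come together, consider the lifecycle of a vibe hack, from planning to execution. An attacker might follow a feedback loop with four stages: reconnaissance, AI-assisted generation of the attack, a combined launch, and iterative refinement.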

In this scenario, the attacker first gathers information on the target (for example, employee roles, network setup, even social media habits). Next, they use AI tools to generate a multifaceted attack: this could mean writing a malware exploit and drafting phishing messages or deepfake audio to deliver that malware convincingly. Then they launch the combined attack: the technical exploit attempts to breach the system while the social-engineering component attempts to trick a user or bypass manual checks. If the attack fails at first, the AI quickly learns and refines. Perhaps the malware was detected by antivirus, so the AI rewrites it slightly and tries again. Or the phishing email was ignored, so the AI rewords the follow-up message to be more enticing, based on the target’s actual interests. This iterative loop can continue at machine speed until the attacker either succeeds or is shut down. The ultimate outcome of a successful vibe hack is not just a network compromise but “breach & trust erosion”: the attacker has stolen data or caused damage and has also made victims question the integrity of their systems and communications.
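The arithmetic behind “iterate until something works” shows why machine speed matters. If each attempt independently has a small probability p of getting past defenses, the probability that at least one of n attempts succeeds is 1 − (1 − p)^n. The sketch below assumes independent attempts and a fixed p, both simplifications (an attacker whose AI learns from failures would push p upward over time), and the 2% per-attempt rate is a purely hypothetical figure.

```python
def p_at_least_one_success(p: float, n: int) -> float:
    """Probability that at least one of n independent attempts succeeds,
    where each attempt succeeds with probability p: 1 - (1 - p) ** n."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a probability in [0, 1]")
    if n < 0:
        raise ValueError("n must be non-negative")
    return 1.0 - (1.0 - p) ** n


# Hypothetical per-attempt success rate of 2%, evaluated at increasing attempt counts
for attempts in (1, 10, 100, 500):
    print(attempts, round(p_at_least_one_success(0.02, attempts), 3))
```

Under these assumptions, a single attempt succeeds only 2% of the time, yet 100 automated attempts succeed with roughly 87% probability. Defenses that look strong per message can still be worn down by volume, which is why reducing attempt throughput (rate limits, out-of-band verification) matters alongside per-message detection.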

Conclusion: A Call to Awareness

“Vibe hacking” may be a new term, but it signals a very real evolution in threats that security and business leaders must grasp. We are entering an era where cyberattacks are as much about manipulating perception as they are about breaking code. AI has become a force multiplier for attackers, enabling technical exploits at scale and hyper-personalized deception that chips away at the trust which organizations depend on. For C-level executives, the takeaway is that cybersecurity can no longer be siloed as an IT problem – it’s now a broader strategic risk to your enterprise’s resilience and reputation.

The first step is awareness. Boards and executives should be discussing questions like: How would we handle a deepfake-driven PR crisis? Is our staff prepared to spot AI-generated spear phishing? What happens when malware can morph faster than our defenses? These aren’t hypotheticals for 2030; they are emerging issues today. According to Gartner, AI-based attacks and AI-crafted misinformation have consistently topped the list of emerging risks identified by risk executives, highlighting that leaders across industries sense what’s coming.

Ultimately, understanding vibe hacking is about recognizing that trust is now part of the attack surface. Strategic investment in trust protection – from secure communication protocols to employee education on synthetic media – will become as vital as any firewall. We are witnessing a shift from the age of “breaking machines” to the age of “breaking minds.” Security strategies must evolve to guard both. By appreciating the dual nature of vibe hacking, executives can better anticipate the next wave of threats and steer their organizations through a landscape where seeing is no longer believing and the enemy may well be an algorithm working its way into both your network and your narrative.

Sources:

Varnell, Z. (2025). HackerNoon: “Vibe Hacking” and the Rise of the AI-Augmented Attacker

Information Security Media Group (2024). ENISA Threat Landscape 2024

Robins-Early, N. (2024). The Guardian: Deepfake Scam Targets WPP CEO

Burt, J. (2024). MSSP Alert: Gartner Survey – AI-Based Attacks as Top Emerging Risk

Megas, K. (2024). NIST Cybersecurity Insights: Managing AI Risks to Cybersecurity

Madou, M. (2025). Dark Reading: OWASP Top 10 for LLM Applications

Blackbird.AI (2023). Combating AI-Generated Deepfakes & Narrative Attacks