Secure by Design: Lessons from the MoD for DevSecOps Developers

In the UK Ministry of Defence’s recent “Secure by Design Problem Book” (2025), one theme rings loud and clear: build security into systems from the ground up and keep it there for life. While the MoD’s context is military capability, its lessons resonate for enterprise and open-source software teams alike. In today’s high-stakes landscape, where 76% of applications have at least one security flaw (and 24% have a high-severity vulnerability), we as developers must rethink how we design, build, and maintain software. This blog post distills key takeaways from the MoD’s guidance into practical insights for developers, touching on DevSecOps culture, secure development practices, and the role of AI. We’ll also highlight how modern tools (like GitHub’s security suite) can help make “secure by design” a reality. Let’s dive in!

One Team, One Mission: Security Is Everyone’s Job

The MoD emphasizes a “one team” approach to security, and this is a mindset every development organization should embrace. Secure software isn’t just the security team’s responsibility; it’s a collaboration between developers, operations, security engineers, and even management. Breaking down silos is critical. When developers, QA, and security work together from the start, security becomes baked into the product, not bolted on later.

This cultural shift requires up-skilling and awareness across roles. The MoD assumes personnel are “suitably qualified and experienced” in Secure by Design, but recognizes the need to grow those skills through training and knowledge sharing. In industry, we face a well-documented skills gap – the cybersecurity workforce has 3.4 million unfilled positions globally. We can’t hire our way out of every problem; instead, we must empower existing teams. Many organizations institute “Security Champions” programs or require developers to undergo secure coding training (covering OWASP Top 10, threat modeling, etc.). Such initiatives help build a baseline of security competency so that issues are caught early by the people writing the code.

Just as importantly, teams need the right tools to support a security-first culture. Modern DevSecOps platforms make it easier for devs to do the right thing. For example, GitHub Advanced Security (GHAS) can automatically scan code for vulnerabilities and bad patterns on every push, giving developers immediate feedback and education on security bugs. This integrates security checks into daily development, reinforcing skills through real code examples. GitHub Codespaces defines development environments as code (through a dev container configuration checked into the repo), so a team can give every member the same reviewed, hardened setup with current dependencies – a boon for onboarding new developers and avoiding “works on my machine” pitfalls. And when it comes to coding assistance, AI tools like GitHub Copilot can boost productivity for junior and senior devs alike, but it’s still crucial that developers learn to interpret and verify these AI-generated suggestions (more on that later). In short, fostering a one-team security culture means aligning people, process, and technology so that building securely is the path of least resistance.

Bridging the Knowledge Gap and Securing the Supply Chain

Another theme from the MoD’s problem book is the challenge of unevenly distributed information and knowledge. In an ideal world, engineers freely share security insights and data. In reality, organizations often grapple with silos, secrecy, or simply the difficulty of getting the right information to the right people. The MoD notes that not all barriers to sharing can be solved by culture alone – sometimes policies or external constraints (like classified intel or proprietary IP) limit what can be shared. In industry, we see parallels in supply chain security and inter-team collaboration. How do you ensure everyone has the necessary info to build securely without exposing sensitive data?

Firstly, encourage transparency and communication within the bounds of safety. Development, security, and ops teams should regularly review threat models, incident post-mortems, and best practices together. For example, if the security team learns of a new critical vulnerability or attack trend, they should alert developers and update requirements or libraries proactively. Conversely, developers should flag risky design decisions or third-party components early so they can be assessed. Techniques like internal “brown bag” sessions, security guilds, or cross-team war games can spread knowledge of both problems and solutions.

Secondly, manage your software supply chain vigilantly. Modern applications pull in countless open-source libraries and third-party services, each an upstream dependency that could harbor weaknesses. A key secure-by-design practice is to inventory and track these dependencies (often via an SBOM, or Software Bill of Materials) and keep them updated. The infamous Equifax breach shows why: attackers exploited a known flaw in the Apache Struts framework (CVE-2017-5638) for which a patch had been available for roughly two months. Equifax’s failure to promptly disseminate and act on that knowledge led to the exposure of personal data belonging to about 147 million people. The lesson is clear: when a critical vuln is announced, assume adversaries will move fast – you need the processes (and tools) in place to respond even faster.
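Here is a minimal sketch of that inventory step, assuming your build already emits a CycloneDX-format JSON SBOM. It reads the component list and checks each component against a small table of known-vulnerable versions. The single entry in `KNOWN_VULNERABLE` is illustrative (Struts 2.3.31 is one of the versions affected by the Equifax-era flaw); real feeds such as OSV or the GitHub Advisory Database describe affected version *ranges*, and in practice you would use an existing scanner rather than hand-rolling the lookup.

```python
import json
from typing import Iterable

# Illustrative advisory data (an assumption for this sketch). Real feeds
# describe affected version ranges, not single exact versions.
KNOWN_VULNERABLE = {
    ("org.apache.struts", "struts2-core", "2.3.31"): "CVE-2017-5638",
}


def load_components(sbom_json: str) -> list:
    """Return the component list from a CycloneDX-style JSON SBOM."""
    doc = json.loads(sbom_json)
    return doc.get("components", [])


def find_vulnerable(components: Iterable[dict]) -> list:
    """Return (group:name@version, advisory id) for every known-bad component."""
    hits = []
    for comp in components:
        key = (comp.get("group", ""), comp.get("name", ""), comp.get("version", ""))
        advisory = KNOWN_VULNERABLE.get(key)
        if advisory:
            hits.append((f"{key[0]}:{key[1]}@{key[2]}", advisory))
    return hits


sbom = json.dumps({
    "bomFormat": "CycloneDX",
    "specVersion": "1.5",
    "components": [
        {"type": "library", "group": "org.apache.struts", "name": "struts2-core", "version": "2.3.31"},
        {"type": "library", "group": "com.google.code.gson", "name": "gson", "version": "2.10.1"},
    ],
})

print(find_vulnerable(load_components(sbom)))
# [('org.apache.struts:struts2-core@2.3.31', 'CVE-2017-5638')]
```

Keeping the SBOM as a build artifact means this check can be rerun later, against new advisories, without rebuilding the application.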

Automation can help here. GitHub’s Dependabot alerts and update pull requests are a great example of bridging the gap between “knowing” and “doing.” Dependabot monitors your dependency graph and notifies you (or even opens a PR) when a library you use has a known vulnerability. In fact, developers are increasingly trusting such automation – in 2023, 60% more vulnerability-related Dependabot PRs were merged than in the previous year. Embracing these tools means critical information (like a new CVE in a library) is distributed quickly to those who can fix it, without relying on each developer to read security advisories manually.
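For teams that want to pull this signal into their own dashboards or triage scripts, here is a minimal sketch against GitHub’s REST endpoint for listing a repository’s Dependabot alerts (`GET /repos/{owner}/{repo}/dependabot/alerts`). It assumes a token that is allowed to read the repository’s Dependabot alerts, fetches only the first page, and tallies alerts by advisory severity. Check GitHub’s API documentation for current token scopes and pagination before relying on it.

```python
import json
import urllib.parse
import urllib.request
from collections import Counter

API_ROOT = "https://api.github.com"


def fetch_open_alerts(owner: str, repo: str, token: str) -> list:
    """Fetch one page (up to 100) of open Dependabot alerts for a repository.

    Calls GET /repos/{owner}/{repo}/dependabot/alerts; pagination is omitted.
    """
    query = urllib.parse.urlencode({"state": "open", "per_page": 100})
    request = urllib.request.Request(
        f"{API_ROOT}/repos/{owner}/{repo}/dependabot/alerts?{query}",
        headers={
            "Accept": "application/vnd.github+json",
            "Authorization": f"Bearer {token}",
            "X-GitHub-Api-Version": "2022-11-28",
        },
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)


def summarize(alerts: list) -> Counter:
    """Count alerts by advisory severity (low, medium, high, critical)."""
    return Counter(alert["security_advisory"]["severity"] for alert in alerts)


# Offline example: a trimmed-down payload containing only the field summarize() reads.
sample = [
    {"security_advisory": {"severity": "critical"}},
    {"security_advisory": {"severity": "high"}},
    {"security_advisory": {"severity": "critical"}},
]
print(summarize(sample))  # Counter({'critical': 2, 'high': 1})
```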

We also must account for cases where information sharing needs to be private. The MoD discusses how sharing certain threat evidence with suppliers could tip off adversaries; in the software world, disclosing a vulnerability publicly before a fix can invite attackers. That’s why coordinated vulnerability disclosure is vital. If you maintain an open-source project, enabling private channels for researchers to report issues is a secure-by-design must. GitHub’s Private Vulnerability Reporting feature (launched in 2022) addresses this by letting researchers alert maintainers through a private form in the repo. This ensures that knowledge of a security flaw reaches those who can act on it, without unnecessary public exposure. By using such mechanisms (and having a clear SECURITY.md policy in your repo), open-source developers signal that security issues will be handled responsibly – turning a potential knowledge asymmetry into a collaborative fix.

Finally, securing the supply chain also means protecting sensitive data like credentials across organizational boundaries. A stark example: in 2016, attackers gained access to a private GitHub repository used by Uber engineers, found AWS credentials committed in the code, and used them to reach Uber’s cloud storage and steal data on 57 million riders and drivers. Hard-coding secrets or leaving them in config files can undermine even a well-designed system. Secret management (using vaults or cloud key managers) and secret scanning in code repos are essential habits. GitHub’s secret scanning can flag accidentally committed API keys or passwords soon after they are pushed (and, with push protection enabled, block the push before the secret lands), so you can revoke them before attackers use them. The Uber case highlights that sharing knowledge unintentionally (through leaked secrets) can be just as dangerous as not sharing what should be shared. Secure-by-design teams treat secrets with care and use automation to police their code for any such exposures.
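To show what secret scanning is looking for, here is a deliberately narrow sketch that searches text for strings shaped like AWS access key IDs (the `AKIA`/`ASIA` prefix followed by 16 uppercase letters or digits). It is far less thorough than GitHub’s secret scanning or dedicated tools, which cover many providers and verify candidate secrets. The function names are hypothetical, and the example key is the placeholder AWS uses in its own documentation.

```python
import re
from pathlib import Path

# Shape of AWS access key IDs: long-lived (AKIA) and temporary (ASIA) credentials.
AWS_KEY_ID = re.compile(r"\b(?:AKIA|ASIA)[0-9A-Z]{16}\b")


def scan_text(text: str, source: str = "<string>") -> list:
    """Return (source, line number, masked match) for each candidate key ID."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for match in AWS_KEY_ID.finditer(line):
            key = match.group()
            # Mask all but the last four characters so the report doesn't re-leak the key.
            findings.append((source, lineno, "*" * (len(key) - 4) + key[-4:]))
    return findings


def scan_tree(root: str) -> list:
    """Scan every readable text file under `root`."""
    findings = []
    for path in Path(root).rglob("*"):
        if path.is_file():
            try:
                text = path.read_text(encoding="utf-8")
            except (UnicodeDecodeError, OSError):
                continue  # skip binary or unreadable files
            findings.extend(scan_text(text, str(path)))
    return findings


print(scan_text("aws_access_key_id = AKIAIOSFODNN7EXAMPLE\n", "config.py"))
```

A hit should be treated as a compromised credential: revoke and rotate it first, then clean up the history.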

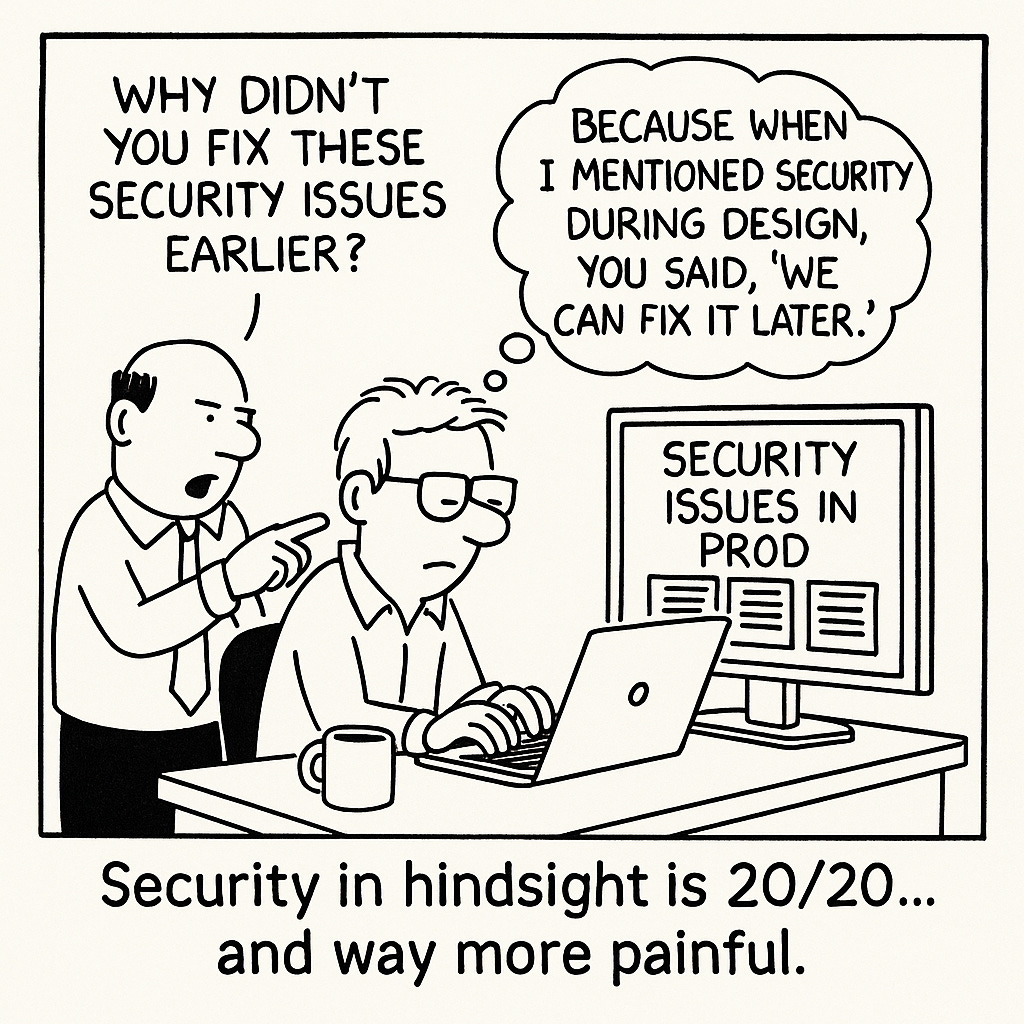

Shift Left: Build Security In from Day One

“Secure by Design” fundamentally means considering security at the earliest stages, not as an afterthought. The MoD highlights that while a project is still just an idea on paper, that’s the time to start weaving in security requirements. It’s far cheaper and more effective to get the design right than to retrofit security later. A widely cited figure attributed to IBM research puts the cost of fixing a vulnerability in production at up to 30 times the cost of fixing it during design. And beyond cost, late fixes may simply come too late: by the time a weakness is discovered in the field, a breach may already have occurred.

What does “shifting left” look like in practice for developers? It starts with requirements and threat modeling. As features are being planned, define the security outcomes alongside the functional ones. For example, if you’re building a fintech app feature to upload documents, a functional requirement is “support PDF and image uploads,” and a parallel security requirement might be “validate file types and scan for malware.” Conducting threat modeling or risk brainstorming in early design meetings can illuminate these needs. The MoD notes that at early stages, different experts (security, safety, human factors) will each be contributing analyses – the goal is to make security part of that initial analysis, so it’s considered alongside usability, performance, and other factors. If you identify high-value assets and potential threats up front, you can design the architecture to mitigate them from the get-go (for instance, choosing an encryption strategy, or segregating an especially sensitive microservice).
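The “validate file types” requirement is easy to get subtly wrong by trusting the file name or the client-supplied `Content-Type`. A minimal sketch, assuming the feature accepts only PDF, PNG, and JPEG, checks the file’s leading magic bytes instead. The size limit and the `UploadRejected` exception are hypothetical, and the malware scan is left as a comment because it depends on whichever scanning engine you use.

```python
MAX_UPLOAD_BYTES = 10 * 1024 * 1024  # hypothetical 10 MiB limit

# Leading "magic bytes" for the only formats this feature accepts.
SIGNATURES = {
    b"%PDF-": "application/pdf",
    b"\x89PNG\r\n\x1a\n": "image/png",
    b"\xff\xd8\xff": "image/jpeg",
}


class UploadRejected(ValueError):
    """Raised when an upload fails validation."""


def validate_upload(data: bytes) -> str:
    """Return the detected MIME type, or raise UploadRejected."""
    if len(data) > MAX_UPLOAD_BYTES:
        raise UploadRejected("file too large")
    for magic, mime in SIGNATURES.items():
        if data.startswith(magic):
            # A malware scan (handing `data` to your AV engine) would go here.
            return mime
    raise UploadRejected("unsupported or disguised file type")


print(validate_upload(b"%PDF-1.7 ..."))  # application/pdf
try:
    validate_upload(b"MZ\x90\x00")  # a Windows executable header, e.g. renamed to .pdf
except UploadRejected as err:
    print("rejected:", err)
```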

Crucially, avoid the “bolt-on security” trap. The MoD puts it plainly: bolting security on later increases costs and leaves systems more open to attack. We’ve all seen projects where looming deadlines tempt teams to postpone security fixes (“we’ll handle authN later,” or “let’s just expose this API now and add rate limiting after beta”). Such deferrals often come back to bite. Classic examples include APIs shipped without brute-force protection and devices shipped with insecure default credentials, which let attackers simply guess passwords or keys; the 2016 Mirai botnet, for instance, spread by logging into IoT devices that still used factory-default passwords. Retrofitting basics like strong authentication or encryption after deployment can be like changing the engines mid-flight.
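Building brute-force protection in from day one doesn’t have to be elaborate. The sketch below (a hypothetical `LoginThrottle` class, kept in memory for illustration) locks an account after a number of failed logins inside a sliding time window. A production version would keep this state in a shared store, also throttle per source IP, and pick thresholds from your own risk assessment.

```python
import time
from collections import defaultdict, deque


class LoginThrottle:
    """Lock an account after `max_failures` failed logins within `window` seconds."""

    def __init__(self, max_failures=5, window=300.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.window = window
        self.clock = clock  # injectable so the behaviour can be tested
        self._failures = defaultdict(deque)  # account -> timestamps of recent failures

    def _recent(self, account):
        """Drop failures older than the window and return what remains."""
        failures = self._failures[account]
        cutoff = self.clock() - self.window
        while failures and failures[0] <= cutoff:
            failures.popleft()
        return failures

    def is_locked(self, account):
        return len(self._recent(account)) >= self.max_failures

    def record_failure(self, account):
        self._recent(account).append(self.clock())

    def record_success(self, account):
        self._failures.pop(account, None)


# Usage with a fake clock: three failures lock "alice" until they age out.
now = [0.0]
throttle = LoginThrottle(max_failures=3, window=60, clock=lambda: now[0])
for _ in range(3):
    throttle.record_failure("alice")
print(throttle.is_locked("alice"))  # True
now[0] = 61.0
print(throttle.is_locked("alice"))  # False
```

A login handler would call `is_locked` before checking the password, so a locked account gets no further guesses.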

Instead, build those controls in early. In agile environments, this can be achieved by including security in “Definition of Done” for user stories – e.g., a story isn’t complete unless input validation and permission checks are in place for that feature. Teams can use checklists or OWASP Cheat Sheets to ensure common areas are covered at design and code time.
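One way to make “permission checks are in place” a checkable part of the Definition of Done is to put the check in a reusable decorator and require a test proving that an unauthorized user is refused. This is a minimal sketch: the `requires` decorator, the `user` dictionary shape, and the permission string are all hypothetical stand-ins for whatever your framework provides.

```python
from functools import wraps


class PermissionDenied(Exception):
    """Raised when a user lacks the permission an action requires."""


def requires(permission: str):
    """Decorator factory: refuse the call unless `user` holds `permission`."""
    def decorator(func):
        @wraps(func)
        def wrapper(user: dict, *args, **kwargs):
            if permission not in user.get("permissions", set()):
                raise PermissionDenied(f"{user.get('name', '?')} lacks {permission}")
            return func(user, *args, **kwargs)
        return wrapper
    return decorator


@requires("documents:delete")
def delete_document(user: dict, doc_id: int) -> str:
    # Hypothetical action; a real handler would delete from storage here.
    return f"deleted {doc_id}"


# The kind of test a story would need before it counts as "done":
admin = {"name": "ana", "permissions": {"documents:delete"}}
viewer = {"name": "vic", "permissions": {"documents:read"}}
assert delete_document(admin, 42) == "deleted 42"
try:
    delete_document(viewer, 42)
    raise AssertionError("viewer should not be able to delete documents")
except PermissionDenied:
    pass
```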

Tooling again is an ally here. GitHub Actions can enforce security steps in your CI/CD pipeline so that every build and release undergoes certain checks. For instance, you can have an Action workflow that runs a CodeQL static analysis (part of GitHub Advanced Security) on each pull request, flagging SQL injection, XSS, or other common issues before the code ever hits production. This effectively shifts a security review into the development phase – developers get quick feedback and can fix issues as they code, rather than weeks or months later during pen-tests. Similarly, dependency review (another GHAS feature) can warn you at PR time if a new library you added has known vulnerabilities, so you can choose a safer alternative early on.
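To see the kind of issue such a pipeline catches, compare these two lookups against an in-memory SQLite database using Python’s standard `sqlite3` module. The first splices user input into the SQL string, which is the pattern SQL-injection queries in tools like CodeQL look for when the input comes from an untrusted source such as an HTTP request; the second uses parameter binding. The table and payload are illustrative.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, is_admin INTEGER)")
conn.executemany("INSERT INTO users VALUES (?, ?)", [("alice", 0), ("root", 1)])


def find_user_unsafe(name: str) -> list:
    # Vulnerable: attacker-controlled text becomes part of the SQL statement.
    return conn.execute(f"SELECT name FROM users WHERE name = '{name}'").fetchall()


def find_user_safe(name: str) -> list:
    # Parameter binding: the driver passes `name` as data, never as SQL.
    return conn.execute("SELECT name FROM users WHERE name = ?", (name,)).fetchall()


payload = "x' OR '1'='1"
print(len(find_user_unsafe(payload)))  # 2 (every user leaks)
print(len(find_user_safe(payload)))    # 0
```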

Real-world data underscores the benefits. Microsoft famously instituted its Security Development Lifecycle (SDL) in the mid-2000s after worms like Blaster ravaged Windows XP. The result was a significant drop in vulnerabilities in later Windows versions, evidence that systematic secure design and coding practices produce more robust software. And in a 2024 study, Security Compass reported that organizations that implemented secure-by-design processes saw 79% fewer vulnerabilities in their applications. Fewer bugs early means fewer fire-drills later – and faster delivery too. As one Security Compass analysis noted, developers can lose 25-30% productivity when context-switching to fix late-stage security bugs. Shifting left not only prevents breaches, it keeps development flowing by reducing those surprise disruptions.

In summary, make security a first-class design goal. Use threat modeling, secure coding standards, and CI/CD enforcement to catch issues in the requirements and coding phase. The earlier a security flaw is found, the easier and cheaper it is to fix – and the less likely you’ll ever have to notify users or regulators about it.

Continuous Vigilance: Security Through the Software Lifecycle

Secure by Design doesn’t stop at deployment – it must be sustained “through life,” as the MoD calls out. Today’s software is living, evolving, and operating in hostile environments. Threats don’t stand still, and neither can your security. This means adopting a DevSecOps mindset of continuous monitoring, feedback, and improvement.

One aspect is continuous risk management and monitoring in operations. The MoD mandates continuous monitoring and assurance for long-lived military capabilities, and enterprises should do the same for their production systems. In practice, this includes instrumenting applications with logging and intrusion detection, collecting telemetry, and using systems like SIEMs or cloud security monitors to watch for suspicious activities. For example, if you have an e-commerce platform, you’d want alerts if an admin account starts performing abnormal bulk data exports, or if an unknown client is scraping your APIs at high rates. Feeding these insights back to the development team is gold: it can lead to new security requirements (e.g., “we need an alert on unusual admin behavior” becomes “we should build adaptive authentication or better admin audit trails in the next iteration”).
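The “abnormal bulk data exports” alert can start out as a simple baseline comparison. This sketch, assuming you already collect per-account counts of exported rows per day, flags any account whose export today exceeds a multiple of its historical median. The function name, the factor of 10, and the minimum floor are hypothetical tuning choices rather than recommendations; in practice this logic would often live in your SIEM’s own rule language.

```python
from statistics import median


def export_anomalies(history: dict, today: dict, factor: float = 10.0, floor: int = 1000) -> list:
    """Return accounts whose rows exported today exceed `factor` x their historical median.

    `floor` stops accounts with tiny or missing baselines from alerting on small exports.
    """
    flagged = []
    for account, rows in today.items():
        baseline = median(history.get(account) or [0])
        if rows > max(factor * baseline, floor):
            flagged.append(account)
    return sorted(flagged)


history = {"admin-ana": [120, 90, 150, 110], "admin-bo": [2000, 1800, 2500, 2200]}
today = {"admin-ana": 48000, "admin-bo": 2600, "admin-new": 500}
print(export_anomalies(history, today))  # ['admin-ana']
```

Flagged accounts are exactly the kind of signal worth feeding back to the development team as a requirement for better audit trails.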

Another critical piece is maintenance and patching. Over a system’s lifetime, new vulnerabilities will be discovered – in your code, in libraries, in underlying platforms. Teams must have a process to promptly address them. This is where having automated tests and CI/CD pays off: you can ship security patches quickly with confidence. Dependabot, again, helps by continuously scanning for new dependency issues even after your code is released. Similarly, keeping container base images or server OS packages updated is important; many organizations now use automation to rebuild and redeploy containers when a critical CVE in, say, OpenSSL or glibc is announced. A recent example is the Log4j “Log4Shell” incident (late 2021) – organizations that had mature DevSecOps pipelines patched or mitigated that issue within days, while others struggled for weeks. In 2025, there’s no excuse for running software with known critical vulnerabilities for long. If Equifax taught us the cost of not patching, incidents like Log4Shell taught us the value of agility: some reports indicated that companies with automated dependency management remediated Log4j on thousands of servers in hours – a task that would’ve been Herculean manually.
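A “process to promptly address them” is easier to enforce when it is measurable. Here is a minimal sketch, assuming a hypothetical remediation policy (the `SLA_DAYS` values are placeholders, not a standard) and a scanner that reports when each advisory was published. It lists open findings that have outlived their deadline; a scheduled CI job could fail or page someone when the list is non-empty. The package names are illustrative.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Hypothetical remediation deadlines in days per severity; set these from your own policy.
SLA_DAYS = {"critical": 7, "high": 30, "medium": 90, "low": 180}


@dataclass
class Finding:
    package: str
    severity: str    # one of the SLA_DAYS keys
    published: date  # when the advisory (and a fix) became available


def overdue(findings: list, today: date) -> list:
    """Describe each open finding that has outlived its remediation deadline."""
    late = []
    for f in findings:
        deadline = f.published + timedelta(days=SLA_DAYS[f.severity])
        if today > deadline:
            late.append(f"{f.package} ({f.severity}, {(today - deadline).days} days over)")
    return late


open_findings = [
    Finding("example-parser", "critical", date(2025, 3, 1)),
    Finding("example-http", "medium", date(2025, 2, 1)),
]
print(overdue(open_findings, today=date(2025, 3, 20)))
# ['example-parser (critical, 12 days over)']
```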

Secure-by-design thinking also accounts for the human factor over time. People who built a system move on; new engineers join who don’t know the history. The rationale for security decisions can get lost, leading to “security decay” where a well-designed system becomes insecure due to complacency or lack of understanding. The MoD notes that design assumptions may be forgotten as time passes and new generations of engineers take over. To counter this, document your threat models, security requirements, and major design justifications. Treat these as living documents that are updated as the system evolves. Many firms embed this into an internal wiki or ADR (Architecture Decision Record) system. That way, when someone proposes a change (e.g., “let’s remove this validation to make integration easier”), the team can consult past decisions (“we put that validation because of X threat”) and avoid inadvertently undermining security. Some organizations even require a security impact section in change proposals. This knowledge continuity is akin to the MoD’s call to sustain Secure by Design research and practice as long as the capability exists.

Interoperability is another consideration. The MoD talks about coalition partners and how differing security postures must still work together. In industry, think of this like ensuring your security works when integrating with partners or acquired companies. Sticking to widely accepted standards (OAuth2, SAML, TLS configurations, etc.) and zero-trust principles can help maintain security when systems interconnect. It’s an area often overlooked until integration happens – so, secure by design also means designing for integration securely (e.g., you might build an API with the assumption it could be called from a partner’s system one day, thus you enforce proper auth and throttling from day one).
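On the TLS side, “widely accepted configurations” mostly means not weakening the defaults. A minimal sketch using Python’s standard `ssl` module starts from `ssl.create_default_context()`, which enables certificate and hostname verification, and refuses protocol versions older than TLS 1.2. The optional client certificate (mutual TLS) is an assumption about how a partner integration might authenticate, not something every partner will require.

```python
import ssl
from typing import Optional


def make_client_tls_context(client_cert: Optional[str] = None,
                            client_key: Optional[str] = None) -> ssl.SSLContext:
    """Build a client-side TLS context for calling partner APIs."""
    # Secure defaults: certificate verification and hostname checking are on.
    ctx = ssl.create_default_context()
    # Refuse TLS 1.0 and 1.1 even if the platform still allows them.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    if client_cert:
        # Optional mutual TLS: present our own certificate to the partner.
        ctx.load_cert_chain(certfile=client_cert, keyfile=client_key)
    return ctx


ctx = make_client_tls_context()
print(ctx.minimum_version, ctx.verify_mode, ctx.check_hostname)
```

The context can then be passed to standard clients, for example `urllib.request.urlopen(url, context=ctx)`, so every outbound call inherits the same policy.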

To maintain continuous vigilance, make use of your pipelines and production tooling:

Set up scheduled security scans (dynamic analysis, dependency checks) in CI (e.g., a weekly GitHub Actions workflow that runs a DAST tool or an open-source secret scanner against the repo).

Use secret scanning on all branches to catch secrets that might slip in at any point, not just on merge.

Leverage infrastructure-as-code scanning (tools like Checkov, or Terraform linters such as TFLint) if your app is cloud-deployed, so your cloud configs stay hardened.

Implement runtime security measures like osquery, cloud-config audits, or container security policies to catch misconfigurations in live environments.

Regularly review and rotate secrets and keys; one good practice is to automate key rotation and expiry so that even if something leaked, it won’t be useful for long.

Conduct periodic security chaos engineering or drills – simulate an incident to test your monitoring and response, and feed the results back into improving design (for example, a drill might reveal that your alerting didn’t catch a certain exploit, prompting you to add a new detection mechanism).
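Automating rotation starts with knowing which keys are overdue. The sketch below assumes you can export each key’s creation time (most cloud IAM and secret-manager services expose this metadata) and uses a hypothetical 90-day policy; the function name and key IDs are illustrative. Its output could drive a scheduled job that rotates or disables the listed keys.

```python
from datetime import datetime, timedelta, timezone

MAX_KEY_AGE = timedelta(days=90)  # hypothetical rotation policy


def keys_due_for_rotation(created_at: dict, now: datetime) -> list:
    """Return IDs of keys older than MAX_KEY_AGE, oldest first."""
    stale = sorted(
        (created, key_id) for key_id, created in created_at.items()
        if now - created > MAX_KEY_AGE
    )
    return [key_id for _, key_id in stale]


now = datetime(2025, 6, 1, tzinfo=timezone.utc)
keys = {
    "ci-deploy": datetime(2025, 1, 10, tzinfo=timezone.utc),
    "billing-api": datetime(2025, 5, 1, tzinfo=timezone.utc),
    "legacy-etl": datetime(2024, 11, 2, tzinfo=timezone.utc),
}
print(keys_due_for_rotation(keys, now))  # ['legacy-etl', 'ci-deploy']
```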

The mindset should be that security is never “done.” It’s an ongoing process of learning and adapting. The threat landscape in 2025 includes ransomware gangs, supply chain attacks, and adversaries using AI to find bugs. By continuously integrating updates and monitoring, you drastically reduce the window of opportunity for attackers. As the MoD states, the “window of exposure” must be minimized. If you address issues continuously, you shorten that window and maybe even stay a step ahead. Teams that do this treat security defects like any other bug – to be fixed in the next sprint or sooner – rather than something to postpone.

AI: A Powerful Ally – and Potential Pitfall – in Secure Development

No discussion of modern software development is complete without touching on AI. From code-generation assistants to AI-based testing, these technologies are transforming how we work. The MoD problem book’s theme of unevenly distributed knowledge applies here in two ways: AI might help close knowledge gaps by providing on-demand expertise, but it also opens a new gap when not everyone understands AI’s implications. For developers, the rise of tools like GitHub Copilot and other AI coding assistants is exciting and productivity-boosting. However, to use these tools in a secure-by-design manner, we need to be mindful of their limitations.

AI coding assistants can help write code faster, but they do not inherently understand security or context. They learned from billions of lines of code, including insecure code. Research has shown that if prompted incautiously, Copilot might suggest code that is vulnerable. A 2021 study by NYU researchers found that about 40% of the programs Copilot generated in the scenarios tested contained security vulnerabilities. And a 2024 report by Snyk noted that Copilot can even amplify existing security debt: if your codebase has insecure patterns, it may repeat them in its suggestions. What this means is that AI is not a replacement for secure coding practices or code review. It’s a helper, not an autopilot.

That said, when used wisely, AI can be a boon for security. It can reduce drudgery (writing boilerplate input sanitization code, for example) and free up human developers to focus on critical thinking and auditing. The key is to keep humans in the loop. If you use Copilot or ChatGPT to generate code, always review the suggestions through a security lens: Are we validating inputs? Could this query be susceptible to SQL injection? Does this code follow our org’s secure coding guidelines? Treat AI suggestions as you would a junior developer’s contribution: helpful, but needing scrutiny. Many teams are already doing this by pairing Copilot with automated checks, e.g., using Copilot to speed up development while running static analysis (SAST), such as CodeQL code scanning in GitHub Code Security, over the generated code to catch glaring issues. This aligns with the industry’s advice to put guardrails around AI-generated code (manual reviews, SAST in CI, and developer training on secure patterns).
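Part of that security lens can be automated cheaply. As a toy illustration (not a substitute for a real SAST tool), this sketch uses Python’s standard `ast` module to flag calls in generated Python code that deserve a second look: `eval`, `exec`, `os.system`, `pickle.loads`, and `subprocess` calls with `shell=True`. The list of risky calls is an assumption you would tailor, and the check only matches names written out literally, so aliased imports slip through.

```python
import ast

# Calls worth a second look in generated code (an assumption; tailor to your codebase).
RISKY_CALLS = {"eval", "exec", "os.system", "pickle.loads"}


def dotted_name(node: ast.AST) -> str:
    """Turn `os.system`-style call targets back into a dotted string."""
    if isinstance(node, ast.Name):
        return node.id
    if isinstance(node, ast.Attribute):
        return f"{dotted_name(node.value)}.{node.attr}"
    return ""


def flag_risky_calls(source: str) -> list:
    """Return (line number, description) for each risky call in Python source."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, ast.Call):
            continue
        name = dotted_name(node.func)
        if name in RISKY_CALLS:
            findings.append((node.lineno, name))
        # subprocess.run(..., shell=True) and friends hand the string to a shell.
        shell_true = any(
            kw.arg == "shell" and isinstance(kw.value, ast.Constant) and kw.value.value is True
            for kw in node.keywords
        )
        if name.startswith("subprocess.") and shell_true:
            findings.append((node.lineno, f"{name}(shell=True)"))
    return sorted(findings)


generated = (
    "import os, subprocess\n"
    "def run(cmd):\n"
    "    os.system(cmd)\n"
    "    subprocess.run(cmd, shell=True)\n"
    "    return eval(cmd)\n"
)
print(flag_risky_calls(generated))
# [(3, 'os.system'), (4, 'subprocess.run(shell=True)'), (5, 'eval')]
```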

Encouragingly, AI tools are improving. GitHub has introduced a “vulnerability filtering system” for Copilot that attempts to block common insecure code patterns (like hard-coded credentials or SQL injection). Early versions of Copilot might gladly suggest using exec() on user input if prompted poorly; newer versions aim to curb such risky suggestions. This is a welcome evolution, but it’s not foolproof, so developers should understand what these filters do and don’t cover and stay current with the tool’s changes. In the future, we can expect AI to play a bigger role in security, such as identifying risky code during code review. Some of this has already arrived: GitHub’s Copilot Autofix, for example, proposes fixes for code scanning alerts as part of the developer workflow.
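The Python analogue of that risky `exec()` suggestion is calling `eval()` on user input to parse something like a list. A safer sketch, assuming the input is meant to be a plain literal, uses the standard library’s `ast.literal_eval`, which evaluates only literals and rejects names, calls, and attribute access. Python’s documentation warns that crafted input can still exhaust memory or the interpreter’s stack, hence the hypothetical length cap; for data exchange, `json.loads` is often the better choice.

```python
import ast

MAX_INPUT_CHARS = 10_000  # hypothetical cap on untrusted input size


def parse_user_list(text: str) -> list:
    """Parse a user-supplied list literal such as "[1, 2, 3]" without executing code."""
    if len(text) > MAX_INPUT_CHARS:
        raise ValueError("input too long")
    # literal_eval accepts only literals; names, calls, and attribute access raise
    # ValueError, and malformed syntax raises SyntaxError.
    value = ast.literal_eval(text)
    if not isinstance(value, list):
        raise ValueError("expected a list")
    return value


print(parse_user_list("[1, 2, 3]"))  # [1, 2, 3]
try:
    parse_user_list("__import__('os').system('id')")
except (ValueError, SyntaxError) as err:
    print("rejected:", type(err).__name__)  # rejected: ValueError
```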

Beyond code generation, AI can aid secure design in other ways. AI-based dependency analysis might predict which libraries are likely to become vulnerable. AIOps tools can detect anomalies in system behavior faster than humans. Even threat modeling may benefit, with AI tools that suggest potential threats for a given architecture. As of 2025, many of these are still emerging, but developers and security engineers should keep an eye on them as force multipliers.

The bottom line: Embrace AI tools to enhance productivity, but do so with eyes open. Use them alongside robust security practices, not in place of them. When Copilot suggests a chunk of code, you as the developer remain the ultimate gatekeeper of quality and security. By guiding AI with secure prompts (e.g., comments that ask for safe implementations) and thoroughly testing its outputs, you can harness its power while mitigating risks. In a sense, making AI part of a secure-by-design workflow is itself a new skill for the “one team” to learn.

Conclusion: Secure by Design, in Every Line of Code

The UK MoD’s Secure by Design guidance may come from a defense context, but its core message resonates universally: security is not an add-on; it’s a fundamental design principle. For enterprise and open-source developers, this means weaving security into every phase of the software lifecycle – from initial idea, to commit, to CI pipeline, to deployment and beyond. We’ve explored how cultivating a security-minded culture (“one team”), sharing knowledge and managing supply chain risks, shifting left in development, and staying vigilant through continuous operations all play a role in building safer software. We’ve also seen that we’re not alone in this journey: modern platforms like GitHub provide powerful features (Advanced Security, Dependabot, Codespaces, Copilot, and more) to assist us in implementing these practices at scale.

Importantly, being “secure by design” doesn’t mean innovation slows or that we become risk-averse to the point of paralysis. On the contrary, when done right, secure-by-design practices enable faster innovation. If we integrate security from the start, we encounter fewer costly surprises later – freeing us to focus on new features and improvements with confidence. It’s inspiring to note data points like the 79% reduction in vulnerabilities for organizations that embraced these practices. Each critical bug prevented is an outage avoided, a breach averted, a customer’s trust preserved.

As developers and technical leaders, we should take these lessons to heart. The stakes – whether national security or protecting users’ personal data – are simply too high for us to do otherwise. The next time you kick off a project or even a small feature, ask: How will this be secure by design? That question might lead you to involve a security colleague in a design review, to choose a safer default, or to add an item to your sprint for writing a threat model. Over time, those small choices accumulate into a robust security posture.

In the ever-evolving tech landscape, new challenges (and new regulations) will continue to emerge. By building on the solid foundation of secure-by-design principles, developers can meet these challenges head-on. Let’s learn from the MoD and industry experiences – and build software that we can be proud of for its resilience. Security, after all, is a journey, not a destination. And every push to the repo is an opportunity to do things a little more securely than the last.
