Gone in 60 Seconds: The Secret Rotation Illusion Meets Reality

Introduction – Spilled Secrets and Instant Mayhem

Chief Information Security Officers (CISOs) and code-wrangling developers alike, take note: your leaked credentials are not enjoying a quiet retirement on some forgotten server. In fact, the moment an API token or database password slips into the public domain, it’s basically a starter pistol for cyber miscreants. They will grab your “secret” and run, often in mere seconds. We’ve long treated secret rotation (regularly changing keys/tokens) as a comforting security blanket, but startling new research shows this may be nothing more than a security illusion.

Imagine leaving the keys to your kingdom under the doormat, only this doormat is the internet. Credential leaks are happening on a pandemic scale, tens of millions each year, and attackers don’t even need to break a sweat to abuse them. In fact, about 70% of leaked secrets remain active two years later! This article dives into how quickly leaked secrets get exploited, the frightening odds of abuse, common attack vectors from recent breaches, and what dastardly deeds attackers perform once they’re in. Buckle up, because your leaked keys go from your code repository to “pwned” faster than you can say “rotate password.”

Secrets Leaked: Gone in 60 Seconds (or Less)

You’ve heard the proverb “a secret is no longer a secret once it’s shared.” In cybersecurity, once a credential is exposed, it’s effectively public property, and the criminal bots are very interested. How fast can things go wrong? According to multiple experiments and real-world traps, extremely fast:

Under a minute: In Clutch Security’s “Secret Rotation Illusion” experiment, the quickest exploitation of a leaked credential occurred in under 40 seconds. That was a juicy AWS key stashed in a public Docker container image, and some lurking bot snagged it almost instantly. Another token dropped on GitHub was abused in about one minute, as attackers forked the repo and started poking around confidential Terraform state files (yes, they even comb through those).

A matter of seconds to minutes: Security consultancy Cybenari planted AWS canary keys across various platforms and found that on highly watched sites like GitHub, attackers tried to use the keys within seconds of exposure. One access token showed first use at 127 seconds after going live, just over two minutes! It’s clear that automated scanners are continuously prowling popular code repos and will strike almost immediately when something tasty appears.

Nearly as fast as defenders: In a honeypot study published in October 2023, Palo Alto Networks researchers intentionally leaked AWS IAM credentials on GitHub. GitHub’s own automated Secret Scanning detected the leak and alerted AWS, which quarantined the key in about two minutes, pretty quick by defender standards. The attackers, however, weren’t far behind: within five minutes of the leak, a malicious actor had also discovered the key and begun using it for reconnaissance. It became a genuine race between super-fast cats (attackers) and super-fast mice (defenders).

Hours to days in obscure corners: Lest we think everything is instant, Cybenari’s research did note that less-trafficked corners of the web take longer. An AWS key hidden on a personal website took about 50 hours (~2 days) before scrapers found it. And interestingly, an image with credentials on Docker Hub didn’t see an attack for 7 days in one test. In short, if you leak a key on a platform nobody watches, it might survive a leisurely weekend – but don’t count on it lasting much longer.

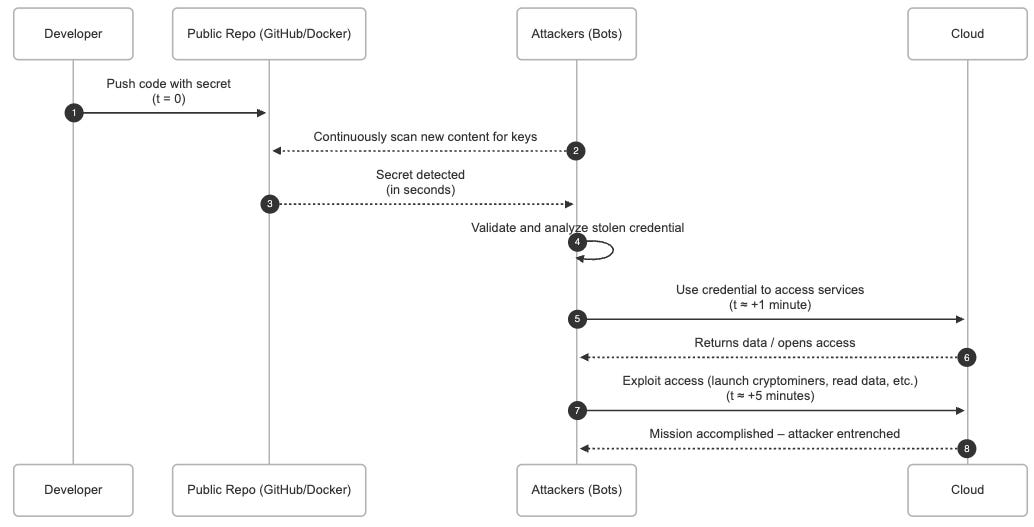

To visualize just how rapid the exploit timeline can be on a popular platform, consider the typical sequence: a developer accidentally commits a secret, within minutes automated bots have found it, attackers begin abusing cloud resources, and your environment is under siege.

As this sequence shows, an exposed secret leads to trouble almost immediately. By the time your team notices the leak (if ever), attackers have likely already logged in with your credentials and made themselves at home. In one case, researchers even got a polite heads-up from a Reddit user who spotted their leaked keys in a post – a rare act of kindness in a landscape where automated thieves usually get there first.

The takeaway? If you’re still thinking “well, we rotate our keys every day, so a brief exposure is no big deal,” it’s time to wake up. Secrets are getting compromised in seconds, well before most rotation policies or human responders can react.
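A little arithmetic shows why. As a simplifying assumption of our own (not something the studies measured), suppose a leak is equally likely at any moment within a rotation interval; the leaked key then stays valid for half that interval on average. The sketch below compares that average window with the approximate time-to-first-abuse figures quoted above.

```python
# Back-of-the-envelope check: how long does a leaked key stay usable under periodic rotation?
# Simplifying assumption (ours): the leak happens at a uniformly random moment within the
# rotation interval, so on average the key stays valid for half the interval.

def expected_exposure_s(rotation_interval_s: float) -> float:
    """Average remaining validity (seconds) of a key leaked at a random point in its interval."""
    return rotation_interval_s / 2

# Approximate time-to-first-abuse figures quoted in this article, in seconds.
TIME_TO_ABUSE_S = {
    "Clutch, Docker image": 40,
    "Clutch, GitHub": 60,
    "Cybenari, GitHub": 127,
    "Unit 42, GitHub": 300,
}

ROTATION_POLICIES_S = {"daily": 24 * 3600, "hourly": 3600}

if __name__ == "__main__":
    for policy, interval in ROTATION_POLICIES_S.items():
        window = expected_exposure_s(interval)
        print(f"{policy} rotation: leaked key usable for ~{window / 60:.0f} min on average")
        for study, t in TIME_TO_ABUSE_S.items():
            # Ratio of the average exposure window to the attacker's observed time-to-use.
            print(f"  {study}: abused after ~{t} s, window is {window / t:.0f}x longer")
```

Even with hourly rotation, a leaked key stays usable for about 30 minutes on average, roughly 45 times longer than the fastest abuse Clutch observed. Rotation shrinks the window; it does not close it.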

What Are the Odds? (Psst – Pretty Darn High)

We know leaks are found quickly, but what’s the likelihood that a leaked token actually gets abused? In a word: inevitable. Multiple industry studies and honeypot experiments indicate that if a secret is exposed in a public or highly scanned space, the chance of an attacker grabbing it approaches 100%:

Clutch’s findings: Over the course of their Secret Rotation Illusion experiment, Clutch leaked various kinds of credentials across different platforms. The result? A significant share were exploited. For example, 59% of leaked AWS access keys were confirmed compromised by unauthorized use. Even “obscure” credentials like database logins weren’t safe: the team saw about 67% of leaked database secrets (e.g., AWS RDS credentials) get pounced on. One particularly high-value secret type hit 100%, with every leaked instance used by an attacker. For that type, the result is effectively a guarantee: leak it, lose it.

Other research metrics: A SANS Institute intern ran a similar test with AWS canary keys on both a personal website and GitHub. “All the Canary Tokens I created were used,” he reported. The website-planted key took a few days, but every single token eventually got hijacked. On GitHub, it happened within minutes and so frequently that the researcher’s inbox flooded with alert emails until he gave up and turned them off. The intent of some access attempts may have been benign (researchers or security firms scanning), but you can bet many were malicious. As the author dryly noted: “if you post your AWS credentials publicly, someone will use them,” be it a helpful white hat or a bad guy with a crypto miner.

Secrets left hanging = attacks waiting to happen: It’s not just short-term threats; long-term exposure is a ticking time bomb. Astonishingly, about 70% of secrets leaked in 2022 were still valid (active) in 2024. That means organizations often fail to rotate or revoke leaked creds, leaving them dangling out there. With such a large portion remaining usable, the probability that someone stumbles on them over time climbs ever closer to certainty. As GitGuardian’s CEO put it, attackers don’t need a zero-day exploit when an exposed password gives unrestricted access to critical systems. Even a single compromised API key can lead to a catastrophic breach: in late 2024, attackers used a stolen BeyondTrust API key to reach the U.S. Treasury Department’s network. The intruders were attributed to a Chinese state-sponsored group, yet by Treasury’s account the way in was one stolen key doing all the work.
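The “climbs ever closer to certainty” argument is compounding probability. Assume, purely for illustration, that an exposed secret has a small, independent chance q of being found on any given day (the 1% figure below is hypothetical, not taken from any study cited here). The chance it has been found after d days is then 1 - (1 - q)^d:

```python
# Probability that a still-valid leaked secret has been discovered after `days` days,
# assuming (hypothetically) an independent per-day discovery chance q.

def prob_discovered(q_per_day: float, days: int) -> float:
    """P(found at least once within `days`) = 1 - (1 - q)^days."""
    if not 0.0 <= q_per_day <= 1.0:
        raise ValueError("q_per_day must be a probability between 0 and 1")
    return 1.0 - (1.0 - q_per_day) ** days

if __name__ == "__main__":
    q = 0.01  # hypothetical 1% chance per day; real rates vary widely by platform
    for days in (1, 30, 365, 730):  # 730 days ~ the two-year span behind the 70% statistic
        print(f"after {days:>3} days: {prob_discovered(q, days):.3f}")
```

Under this toy assumption the probability passes 97% within a year and exceeds 99.9% over two years, which is why a leaked secret left active is a matter of when, not if.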

In short, if your credential is exposed on a public GitHub repo, assume it will be compromised. The odds are heavily stacked against you. Whether it’s a 50% chance or virtually 100% may depend on the type of secret and where it leaked, but the safe assumption is: leak = loss. It’s a Las Vegas slot machine where every pull is a jackpot for the attacker, and you’re the one paying out.

And don’t be overly reassured by “we have monitoring” or “it was only public for an hour.” As we’ve seen, an hour might be 59 minutes too long. Even big tech players have learned this the hard way. In late 2023, researchers found over 1,600 exposed Hugging Face API tokens tied to Microsoft, Google, Meta, and other organizations. Those tokens granted access, often with write permissions, to hundreds of organizations’ ML datasets and models. If researchers hadn’t caught them, attackers could have wreaked havoc on AI projects for millions of users. The probability of abuse was sky-high given the value of those keys – and indeed, nobody is sure whether some were quietly abused before being shut off.

Leak Vectors and Cautionary Tales from the Real World

So how do these secrets slip out in the first place? Unfortunately, developers and IT staff have a knack for accidentally turning sensitive credentials into low-hanging fruit. Here are some of the most common leak vectors, illustrated by recent high-profile breaches:

Public code repositories (GitHub and friends): The canonical example of a modern credential leak is a dev who unintentionally commits an API key or password into code that gets pushed to a public repo. It’s easier done than said: a leftover token in a config file, a hard-coded DB password in a script, and suddenly your private credential is visible to the world. Build artifacts are just as risky. In the 2024 Python Software Foundation leak, a GitHub Personal Access Token with admin privileges was accidentally embedded in a publicly available Docker container image. This token granted extensive access to Python’s critical repositories and infrastructure, including PyPI. Although it was swiftly revoked upon discovery, the incident shows how easily sensitive tokens slip into public artifacts, and why proactive secret management and vigilant scanning matter. Another example: Toyota had credentials tucked in code that was unknowingly uploaded to a public GitHub repo. That repo sat exposed for five years (2017–2022) before anyone noticed. It contained a key to Toyota’s T-Connect cloud, which held data on 290,000 customers. By the time Toyota invalidated the key, it had been public for half a decade, plenty of time for multiple malicious actors to have quietly used it. (Translation: assume the worst, because you’ll likely never know who dipped into that data in those five years.)

Package registries and cloud storage: Code isn’t the only place secrets hide. Sometimes credentials slip into container images (as we saw in Clutch’s Docker Hub test), into packages published on npm/PyPI, or even into public cloud storage buckets. Developers may publish a Docker container with an embedded API secret in an environment variable, for instance. Attackers do comb through Docker Hub images, where thousands of AWS keys are lurking in layers. There have been cases where attackers specifically search public S3 buckets or Google Cloud Storage for config files containing keys. If it’s out there and remotely accessible, it will eventually be found – whether by a benevolent scanner or a bad actor.

Paste bins and forums: Posting a snippet of code or log to a forum like Stack Overflow, Pastebin, or a gist? Better double-check it doesn’t include a credential. A surprising number of leaks happen this way – a developer trying to get help unknowingly pastes an API key along with an error message. Attackers have bots parsing popular paste sites for API-like patterns. One Cybenari canary token posted on Pastebin (no password) started getting hits after a day or two. Public QA forums have similarly been sources of leaks that attackers quietly mine.
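One cheap habit helps here: scrub logs and snippets before sharing them. The sketch below redacts two well-known credential formats, AWS access key IDs (AKIA for long-term keys, ASIA for temporary ones, followed by 16 uppercase letters or digits) and classic GitHub personal access tokens (ghp_ plus 36 alphanumerics). The pattern list is deliberately minimal and illustrative; real secret scanners track hundreds of formats, and the function name is our own.

```python
import re

# Deliberately small, illustrative pattern set; real secret scanners track far more formats.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\b(?:AKIA|ASIA)[0-9A-Z]{16}\b"),
    "github_classic_pat": re.compile(r"\bghp_[A-Za-z0-9]{36}\b"),
}

def redact(text: str) -> str:
    """Replace every match of a known credential pattern with a labeled placeholder."""
    for name, pattern in SECRET_PATTERNS.items():
        text = pattern.sub(f"[REDACTED:{name}]", text)
    return text

if __name__ == "__main__":
    # AKIAIOSFODNN7EXAMPLE is the sample key ID from AWS documentation, not a real key.
    print(redact("InvalidClientTokenId for access key AKIAIOSFODNN7EXAMPLE"))
```

Running the error message through a filter like this before pasting it into a forum costs nothing; a miss costs you the key.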

Third-party breaches exposing secrets: Even if you keep your keys out of public repos, what about your software suppliers and partners? There have been several supply-chain incidents where source code from big firms was stolen or leaked, containing API keys. For example, the 2021 Twitch breach dumped the platform’s entire source code online – including internal AWS keys and creator payout data. Lapsus$ hackers in 2022 hit Samsung and Nvidia, spilling tons of internal data and hardcoded secrets. Likewise, Okta’s December 2022 source code repository breach and others show that your secrets can leak via someone else’s compromise. Once those secrets are out, attackers will try them.

DevOps tools and CI/CD misconfigurations: Credentials often live in CI/CD pipelines, config files, or vaults. Misconfigure a repository or leave a Jenkins console publicly reachable, and those secrets can leak. Toyota’s T-Connect leak (disclosed in 2022) was described as a “software supply chain” issue – essentially a contractor posted sensitive code externally. In another case, an attacker found admin credentials in a Jenkins server and from there pivoted through an entire network. Even collaboration tools like Slack and Jira can harbor credentials: one study found 6% of Jira tickets contain passwords or keys and a few percent of Slack channels have leaked secrets embedded.

Real-world consequences of these leaks are stark. Hugging Face, the AI model hub, disclosed in mid-2024 that an attacker had gained unauthorized access to secrets stored in its hosted “Spaces” projects. Months earlier, in late 2023, researchers had found over 1,600 exposed Hugging Face API tokens, including tokens belonging to big-name AI organizations. Had attackers exploited them, they could have stolen or poisoned popular machine learning models affecting millions. In other words, an API token leak isn’t just about a bit of compute – it can undermine trust in code and data at a massive scale.

The pattern across the Python Software Foundation, Toyota, Hugging Face, and others is depressingly similar: a credential that should have stayed private was unintentionally made public (or accessible to someone who shouldn’t have it), and attackers either immediately or eventually took advantage. Whether it’s through GitHub, an open bucket, or a third-party breach, leaked secrets are the common thread in many major data breaches. Verizon’s annual Data Breach Investigations Report routinely shows that a large share of breaches involve stolen or misused credentials. It’s often the easiest way in – why pick a lock when someone kindly left you the keys?

Behind the Curtain: What Attackers Do Once They Have Your Key

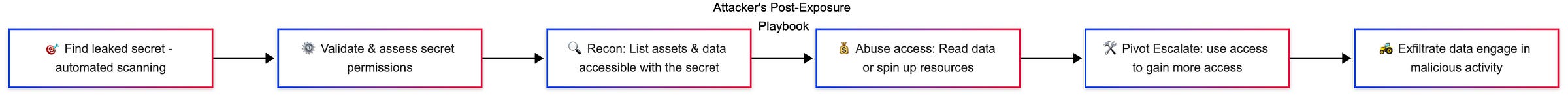

Now for the really sobering part: once an attacker pockets your leaked secret, what do they actually do with it? The short answer: whatever the key allows them to, and fast. The long answer, based on observed behavior:

Automation at scale: The first thing to understand is that most of these leaks are scooped up by bots. Attackers run automated scrapers that continuously scan code repositories, forums, Docker images, etc., for anything resembling credentials. GitHub and Docker Hub are among the most frequently scanned, given their rich yield. These bots operate 24/7, and they don’t stop at one platform. The moment a new commit or post appears, they parse it. So the “attacker’s” initial action is often just a script saying “Oh look, AKIA... (an AWS key) – let’s test that immediately.” In the SANS canary test, the user-agent revealed someone’s Python script using the Boto3 AWS library on a Linux environment to test keys. It’s not a human carefully typing commands – it’s scripted and lightning fast.
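Pattern matching only catches formats you already know. A classic complementary heuristic (early versions of TruffleHog relied on it) flags strings whose characters look random, measured by Shannon entropy in bits per character. A minimal sketch follows; the 20-character minimum length and the 4.0-bit threshold are illustrative assumptions, not tuned values.

```python
import math
import re
from collections import Counter

def shannon_entropy(s: str) -> float:
    """Average bits of information per character, from character frequencies in s."""
    if not s:
        return 0.0
    n = len(s)
    return -sum((c / n) * math.log2(c / n) for c in Counter(s).values())

# Candidate tokens: long runs of characters typical of keys (illustrative choice).
TOKEN_RE = re.compile(r"[A-Za-z0-9+/=_\-]{20,}")

def high_entropy_tokens(text: str, threshold: float = 4.0) -> list[str]:
    """Return long tokens whose entropy exceeds `threshold` bits/char (assumed cutoff)."""
    return [t for t in TOKEN_RE.findall(text) if shannon_entropy(t) > threshold]

if __name__ == "__main__":
    # The secret below is the sample secret access key from AWS documentation.
    line = 'aws_secret = "wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY"'
    print(high_entropy_tokens(line))
```

Defenders and attackers run essentially the same cheap filter; the difference is who runs it first on your commit.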

Immediate validation and use: Once a bot finds a secret, it will typically verify it and start using it within moments. For cloud API keys (AWS, Azure, GCP), the first steps are usually to call cloud APIs and see what’s accessible. In the Palo Alto “EleKtra-Leak” scenario, the attacker who found the AWS key “immediately [started] performing reconnaissance” on the account. This means listing S3 buckets, EC2 instances, IAM roles – basically mapping out what they just unlocked. If it’s a database connection string, the attacker might try to connect to the database and see what tables or data they can get. If it’s an OAuth token or service API key, they might query user data or account info through that service’s API.

Cryptojacking and resource hijacking: One very common next step, especially with cloud infrastructure keys, is to monetize access by deploying crypto-mining. Why bother exfiltrating data if you can simply spin up a few hefty EC2 instances and mine cryptocurrency on someone else’s dime? Indeed, the Palo Alto researchers observed the attacker using the stolen AWS creds to launch a new EC2 instance within minutes to start mining. Palo Alto’s Unit 42 team dubbed this quick-turnaround cryptojacking operation the “EleKtra-Leak” campaign. This tactic has been seen widely: AWS, Google Cloud, or Azure keys get leaked, and almost immediately hundreds of GPU-loaded instances appear, chewing up CPU/GPU to generate crypto until the victim notices the outrageous cloud bill. It’s low-hanging fruit for criminals – pure profit and usually before the victim’s fraud controls kick in.

Data access and theft: If the credentials allow access to data, you can bet attackers will grab as much as they can. For example, when a Toyota key was exposed, an intruder could have used it to access Toyota’s confidential customer data stored in its T-Connect cloud. In other cases, an exposed database password might let the hacker dump an entire customer records table. If an API token grants access to, say, a GitHub account or a SaaS service, the attacker might clone all the code repositories or scrape sensitive documents. One frightening note from Clutch’s research: many platforms don’t even log these read-access events. Services like GitHub, Okta, Twilio, even OpenAI’s API, often lack robust logging for token-based access (especially non-admin actions). This means an attacker using a stolen API key to quietly siphon off data may leave no trace at all, and you’d be none the wiser until much later. Clutch highlighted that a malicious actor could, for instance, use a leaked OpenAI API key to retrieve conversation data or fine-tuned models without triggering any obvious alarms. Talk about silently raiding the cookie jar.

Pivoting and lateral movement: Skilled attackers won’t stop at the first system. Leaked secrets are often just a foothold. From there, they try to pivot deeper into the infrastructure. Clutch’s team observed attackers using compromised AWS keys to escalate privileges – for example, using the key’s access to discover other credentials, create new temporary keys, or move into connected systems. If the initial secret is a cloud credential with broad permissions, an attacker might create backdoor users, open new network paths, or retrieve secrets from a secrets manager. If the secret is for one service (say, a CI/CD system), they might look inside that system for configuration files that contain credentials for yet another system (a common chain: leak a CI token -> use it to read CI pipeline logs -> find another secret within those logs -> use that to breach a database). In essence, the first leaked key is the key to many other kingdoms, if played right. The attack isn’t a one-and-done; it’s a beachhead from which they expand their control.
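The pivoting chain described above is essentially a graph walk: each credential unlocks systems, and some of those systems hold further credentials. The minimal sketch below computes the “blast radius” of one leaked key with a breadth-first search over a hypothetical access map that mirrors the CI-token example (every node name is made up). Running the same analysis over your own secret inventory shows which single leak would hurt most.

```python
from collections import deque

# Hypothetical access map: what each credential or system gives access to.
# Mirrors the chain in the text: CI token -> CI logs -> DB secret -> database.
ACCESS_GRAPH = {
    "leaked_ci_token": ["ci_pipeline_logs"],
    "ci_pipeline_logs": ["db_password_in_logs"],
    "db_password_in_logs": ["customer_database"],
    "customer_database": [],
    "unrelated_api_key": ["billing_service"],
    "billing_service": [],
}

def blast_radius(start: str, graph: dict[str, list[str]]) -> list[str]:
    """Breadth-first search: everything reachable from one leaked credential, in discovery order."""
    seen = {start}
    order = []
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for nxt in graph.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                order.append(nxt)
                queue.append(nxt)
    return order

if __name__ == "__main__":
    print(blast_radius("leaked_ci_token", ACCESS_GRAPH))
```

Cutting a single edge, such as keeping secrets out of CI logs, removes everything downstream of it from the blast radius.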

Global and organized operations: These aren’t random script-kiddies working out of mom’s basement on weekends. The exploit activity shows signs of organized, global campaigns. Clutch’s traps caught attacker traffic from all over: the US, Canada, Mexico, India, and notably a lot from China (one of the most active sources). The diversity of IPs and techniques suggests multiple groups constantly on the hunt. Some groups have particular interests – Clutch saw attackers even trying out AI-related accesses like invoking machine-learning model endpoints on AWS (perhaps hunting for valuable AI compute or data). When one researcher intentionally left a key on a site, one of the first “attackers” turned out to be a security company’s scanner. In another case, it was a bad actor using a ProtonVPN exit node (to mask their identity) and a known Python script. The point is, there’s an entire ecosystem – from legitimate researchers to criminal gangs – constantly looking for leaked secrets. And the criminals are coordinating automation and sharing techniques on forums (there are dark web sites offering “freshly spilled creds” for sale, and guides on how to monetize certain types of keys).

Exfiltration and cleanup: If data can be stolen, it will be. Personal data has resale value, proprietary code might be sold or leaked to embarrass a company, and sensitive emails or messages can be used for extortion. Attackers will often exfiltrate everything they can quickly (before a key gets revoked). For example, if a Slack bot token is leaked, an attacker might pull all channel history or private conversations and then quietly inject a malicious bot to maintain access. If a database credential is leaked, they’ll dump the database. In many cloud breaches, attackers download extensive data from S3 buckets once they log in (like source code, user records, etc.). After grabbing goodies, some attackers attempt to cover tracks – but interestingly, many don’t bother too much in these “smash-and-grab” secret thefts. Why? Because as noted, often the services aren’t logging reads well, or the account gets burned anyway once discovered. The attacker might simply move on to the next stolen key. It’s a volume business.

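Let’s summarize an attacker’s typical playbook after finding a leaked secret, in a simple flow:

Bot finds the secret → validates it within moments → maps what it unlocks (reconnaissance) → monetizes the access (cryptojacking) and/or steals data → pivots to further credentials and systems → exfiltrates what it can, then moves on to the next key.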

Every step above has been witnessed in real incidents. Upon finding a key, attackers waste no time using it for profit or deeper intrusion. If they can quietly maintain access (for example, by creating a new admin user with an exposed cloud key), they will, but often the window is short. After all, some defenders do catch on quickly (GitHub’s own scanners or proactive monitoring by the victim, in the best case). So attackers often operate in a “smash-and-grab” mode: get in, do as much as possible as fast as possible, and accept that the key might get killed soon. In Clutch’s research, even when they rotated keys every hour and re-leaked them, attackers simply re-compromised the new keys just as quickly, indicating these folks are watching in real time and ready to pounce again and again. It’s practically an assembly line of cybercrime.

Conclusion – No More Security Theater: Time to Get Serious

The evidence is overwhelming and a bit terrifying: leaked credentials are among the easiest targets for attackers, and they will be exploited almost immediately in most cases. The old notion that you can rotate secrets periodically and be safe belongs in the same bin as dusty 90-day password change policies. When attackers can compromise a key in 60 seconds, a rotation in 24 hours or even 1 hour isn’t comfort – it’s an illusion. As one report quipped, secret rotation in these scenarios is like “locking the barn door after the horse has bolted.”

So what’s a security-conscious org to do? First, acknowledge the harsh reality: your credentials are probably going to leak at some point (through human error, supply chain, or otherwise), and when they do, you have seconds before the bad guys are using them. That means we need to shift our mindset from relying on after-the-fact rotation to preventing leaks and limiting the damage if one occurs:

Shift to ephemeral secrets and zero trust: Use short-lived, single-use credentials wherever possible. If an API key expires in 5 minutes, an attacker has a much smaller window to do damage. Embrace a Zero Trust approach where every access is verified continuously, not granted simply because a key is presented. For example, AWS IAM roles provide temporary security credentials (issued by AWS STS) that expire automatically; prefer those over long-lived static access keys. Likewise, tools that generate on-demand credentials for databases and similar systems can cut down the risk.
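To make the “expires in 5 minutes” idea concrete, here is a minimal sketch of a self-expiring, HMAC-signed token built only on Python’s standard library. It is not how AWS STS or any particular product works; the token format, the 300-second TTL, the signing key, and the function names are all assumptions for illustration (the optional `now` parameter just makes the behavior easy to test). The point is that expiry is baked into the signed token, so a leaked copy goes dead on its own without anyone rotating anything.

```python
import base64
import hashlib
import hmac
import secrets
import time
from typing import Optional

# Hypothetical issuer-side signing key; it never leaves the token service.
SIGNING_KEY = secrets.token_bytes(32)

def issue_token(subject: str, ttl_s: int = 300, now: Optional[float] = None) -> str:
    """Return 'payload.signature', where the payload embeds the subject and an expiry time."""
    issued = time.time() if now is None else now
    payload = f"{subject}|{int(issued) + ttl_s}".encode()
    sig = hmac.new(SIGNING_KEY, payload, hashlib.sha256).digest()
    return (base64.urlsafe_b64encode(payload).decode()
            + "." + base64.urlsafe_b64encode(sig).decode())

def verify_token(token: str, now: Optional[float] = None) -> Optional[str]:
    """Return the subject if the signature checks out and the token is unexpired, else None."""
    try:
        payload_b64, sig_b64 = token.split(".")
        payload = base64.urlsafe_b64decode(payload_b64)
        sig = base64.urlsafe_b64decode(sig_b64)
    except ValueError:  # wrong shape or bad base64 (binascii.Error subclasses ValueError)
        return None
    expected = hmac.new(SIGNING_KEY, payload, hashlib.sha256).digest()
    if not hmac.compare_digest(sig, expected):
        return None  # forged or tampered token
    subject, expiry = payload.decode().rsplit("|", 1)
    current = time.time() if now is None else now
    return subject if current < int(expiry) else None  # expired tokens are simply dead
```

The trade-off is that clients must fetch fresh tokens often, which is exactly what short-lived cloud credentials ask of you in exchange for a leak window measured in minutes.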

Secret scanning and prevention: Enable protections like GitHub’s secret scanning push protection, which blocks pushes containing known credential patterns. Many cloud providers (AWS, Azure, GCP) integrate with GitHub to auto-revoke or alert on leaked keys – use those features. Internally, deploy scanners (GitHub Secret Protection, TruffleHog, etc.) in your CI pipeline to catch secrets before they make it into production. This is your first line of defense against oopsies. GitHub even offers a free secret risk assessment to show how good or bad things might be.
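The core of a pre-commit or push-time check is simple: look only at the lines being added and refuse the change if any of them match a credential pattern. The sketch below reads a unified diff (for example, the output of `git diff --cached`) and returns a hook-style exit status. It is a toy next to GitHub push protection or TruffleHog, which cover far more formats and can verify candidates; the two patterns and the function names are our own illustrative choices.

```python
import re
import sys

# Illustrative patterns only; production scanners ship hundreds of detectors.
PATTERNS = [
    re.compile(r"\b(?:AKIA|ASIA)[0-9A-Z]{16}\b"),  # AWS access key ID
    re.compile(r"\bghp_[A-Za-z0-9]{36}\b"),         # classic GitHub personal access token
]

def find_secrets_in_diff(diff_text: str) -> list[tuple[int, str]]:
    """Return (diff line number, line) for added lines that match a credential pattern."""
    hits = []
    for lineno, line in enumerate(diff_text.splitlines(), start=1):
        # Only inspect added lines; '+++' is the file header, not content.
        if line.startswith("+") and not line.startswith("+++"):
            if any(p.search(line) for p in PATTERNS):
                hits.append((lineno, line))
    return hits

def main(diff_text: str) -> int:
    """Report hit locations on stderr (without echoing the secret) and return 1 to block."""
    hits = find_secrets_in_diff(diff_text)
    for lineno, _ in hits:
        print(f"possible secret on added diff line {lineno}", file=sys.stderr)
    return 1 if hits else 0
```

Wired up as a hypothetical hook, a script ending in `sys.exit(main(sys.stdin.read()))` would receive `git diff --cached` on standard input and stop the commit whenever it returns 1.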

Monitoring and instant response: Implement real-time alerting for any use of credentials that looks suspicious – e.g., an API key being used from an unusual IP or a sudden burst of actions. Given how fast exploitation happens, you want automated systems (or managed services) to disable keys within seconds if possible. Some organizations plant “canary” credentials in their code: if that key ever gets used, you know a leak happened and can react immediately.
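Canary detection is easy to automate because legitimate code never touches a canary. A minimal sketch, assuming credential-usage events already arrive as dictionaries (for example, parsed from an AWS CloudTrail feed). The event fields, the canary inventory, the allow-listed network range, and the `respond` callback are hypothetical stand-ins for your own audit log and key-management tooling.

```python
import ipaddress
from typing import Callable

# Hypothetical inventory of key IDs planted purely as tripwires
# (AKIAIOSFODNN7EXAMPLE is the sample key ID from AWS documentation).
CANARY_KEY_IDS = {"AKIAIOSFODNN7EXAMPLE"}
# Hypothetical network range where legitimate workloads run.
EXPECTED_NETWORK = ipaddress.ip_network("10.0.0.0/8")

def triage(event: dict, respond: Callable[[str, str], None]) -> str:
    """Classify one credential-usage event and trigger a response callback if it looks bad."""
    key_id = event["key_id"]
    source = ipaddress.ip_address(event["source_ip"])
    if key_id in CANARY_KEY_IDS:
        # Canaries have no legitimate users, so any use at all means a leak.
        respond("alert-leak", key_id)
        return "canary-tripped"
    if source not in EXPECTED_NETWORK:
        # Real key used from outside the expected network: disable first, investigate after.
        respond("disable", key_id)
        return "unexpected-source"
    return "ok"
```

The aggressive “disable first” branch trades a little availability risk for seconds of response time, which is the trade the timelines above argue for.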

Thorough audit logging: Pressure your SaaS providers to improve logging of credential usage. If a token is used to read a bunch of data, you need to know. Many services are improving this, but for now, blind spots remain. Fill what gaps you can on your side – e.g., log every call to your cloud that comes from tokens, even read-only ones, and analyze for anomalies.
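Once token-based calls are logged, even a crude rule catches the “sudden burst of actions” pattern that automated abuse tends to produce. The sketch below counts calls per token in a sliding window and flags any token over budget. The 60-second window, the 100-call budget, and the assumption that log entries arrive as (timestamp, token) pairs in time order are illustrative, not recommendations.

```python
from collections import defaultdict, deque

WINDOW_S = 60     # assumed window length in seconds
MAX_CALLS = 100   # assumed per-token budget within the window

class BurstDetector:
    """Flags tokens whose call count inside a sliding time window exceeds a threshold."""

    def __init__(self, window_s: float = WINDOW_S, max_calls: int = MAX_CALLS):
        self.window_s = window_s
        self.max_calls = max_calls
        self.calls = defaultdict(deque)  # token -> timestamps of calls still in the window

    def record(self, timestamp: float, token: str) -> bool:
        """Log one call; return True if this token is now over its budget."""
        q = self.calls[token]
        q.append(timestamp)
        # Drop calls that have fallen out of the window (assumes in-order timestamps).
        while q and q[0] <= timestamp - self.window_s:
            q.popleft()
        return len(q) > self.max_calls
```

A read-only token that suddenly lists every bucket in seconds trips this rule even though no single call looks malicious.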

Defense in depth: Assume some secrets will slip. Apply the principle of least privilege: a leaked API key that only accesses one benign service is far better than a god-mode key. Network segmentation, rate limiting (so an attacker can’t, say, list all your S3 buckets in one go), and anomaly detection can all slow down or contain an attacker who got in with a leaked key. The slower they are, the better chance you have to intercept them.
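Rate limiting is what turns an attacker’s “list everything in one go” into a slow trickle that monitoring can catch. A classic way to do it is a token bucket: each caller’s bucket refills at a steady rate and every request spends one token. A minimal, single-threaded sketch follows; the capacity and refill rate are illustrative, and the optional `now` parameter exists only to make the behavior easy to test.

```python
import time
from typing import Optional

class TokenBucket:
    """Allow bursts up to `capacity` requests, then at most `refill_per_s` requests per second."""

    def __init__(self, capacity: float, refill_per_s: float, now: Optional[float] = None):
        self.capacity = capacity
        self.refill_per_s = refill_per_s
        self.tokens = capacity
        self.last = time.monotonic() if now is None else now

    def allow(self, now: Optional[float] = None) -> bool:
        """Refill based on elapsed time, then spend one token if one is available."""
        current = time.monotonic() if now is None else now
        elapsed = max(0.0, current - self.last)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_s)
        self.last = current
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

In practice you would keep one bucket per credential or per client, so a leaked key can enumerate resources only as fast as the refill rate allows.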

Education and process: Finally, drill it into developer teams that hardcoding secrets is the enemy. Provide easy-to-use secret management tools so devs aren’t tempted to slap a password into code. Many leaks (Toyota’s, for example) happened because code meant to be private was accidentally made public. Foster a culture where any exposure, even in an internal chat, is treated seriously and cleaned up.
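The easy-to-use alternative to a hardcoded password can be as simple as reading it from the environment, where a secrets manager or CI system injects it at runtime, and refusing to start without it. A minimal sketch; the helper name, the error class, and the `DB_PASSWORD` variable in the comment are hypothetical examples.

```python
import os

class MissingSecretError(RuntimeError):
    """Raised when a required secret was not provided by the runtime environment."""

def require_secret(name: str) -> str:
    """Fetch a secret injected at runtime instead of hardcoding it in source."""
    value = os.environ.get(name)
    if not value:
        # Fail loudly rather than falling back to a default baked into the code.
        raise MissingSecretError(f"required secret {name!r} is not set")
    return value

# Anti-pattern this replaces:  DB_PASSWORD = "s3cr3t"  # lives in git history forever
```

Environment variables are not a vault (they can surface in crash dumps or process listings), so treat this as the floor; fetching short-lived credentials from a secrets manager at startup is better.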

It’s time to stop playing secret Santa with your API keys. If you don’t tighten up secrets management, you’re basically inviting attackers to a buffet. The speed and certainty with which leaked credentials get exploited should keep every CISO and engineering manager up at night. The good news is we’re becoming more aware – research like the “Secret Rotation Illusion” has busted the myth that rotating keys is enough, and companies are beginning to adopt more robust strategies.

The next time someone accidentally posts a password on GitHub, remember: the clock starts ticking the second they hit 'Submit'. It’s a high-stakes game of capture the flag now, and the attackers are extremely good at this game. Protect your secrets like your business depends on it – because it does. After all, you can’t leak what you don’t have (or only have ephemerally), and an attacker can’t exploit what they never get a chance to see. Time to turn off the illusion and implement some real security, before another leaked key comes back to bite you – likely in 60 seconds or less.