GitHub's Engineering System Success Playbook (ESSP) Cheat Sheet

Key Takeaways for Engineering Leaders

As an engineering leader, you’ve probably got a million things pulling at your attention: deadlines, deployments, and now navigating the fuzzy promise of generative AI. GitHub’s Engineering System Success Playbook (ESSP) offers clarity amid the chaos, blending best practices from SPACE, DORA, and DevEx into a single, practical approach to achieving quality, speed, and developer happiness. This cheat sheet distills the playbook into straightforward, actionable insights for when you need to quickly gauge your team’s health, decide what to prioritize next, or check that new AI tools genuinely improve your business outcomes.

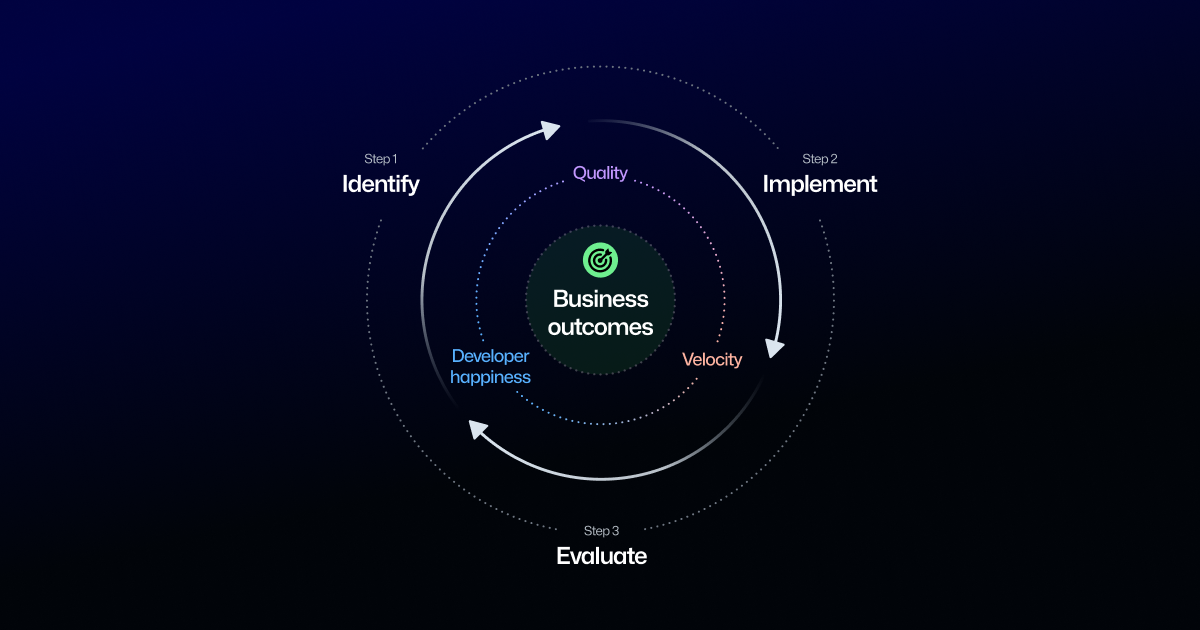

Focus Areas (Engineering “Zones”): GitHub’s ESSP breaks down engineering performance into four zones – Quality, Velocity, Developer Happiness, and Business Outcomes. Think of business outcomes (e.g. customer satisfaction, revenue impact) as sitting on top of a foundation of quality, speed, and developer well-being. Strengthening these foundational zones in unison creates the conditions for better business results.

Integrated Frameworks: The playbook combines best practices from SPACE, DORA, and DevEx research. This means it uses a mix of metrics addressing satisfaction & well-being, engineering flow efficiency, throughput, reliability, and more – giving a holistic view of your engineering system. You’re not tied to one methodology; ESSP blends them into a practical toolkit.

12 Key Metrics (Examples): ESSP recommends 12 measurable metrics (3 per zone) so you can quantify progress. Eight of them, as examples:

• Lead Time (Velocity): how quickly code goes from commit to production.

• Deployment Frequency (Velocity): how often you ship value.

• Change Failure Rate (Quality): the percentage of releases that result in incidents.

• Failed Deployment Recovery Time (Quality): how quickly you restore service after a failed deployment.

• Flow State Hours (Developer Happiness): time developers spend in deep, focused work.

• Tooling Satisfaction (Developer Happiness): how satisfied developers say they are with their tools.

• AI Leverage (Business Outcomes): the percentage of potential productivity gain actually realized through AI adoption.

• Engineering Cost Ratio (Business Outcomes): engineering spend as a percentage of revenue.

Tip: Use these as starting points. You can adapt metrics to fit your tech stack and definitions, but make sure every zone is covered.
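To make the velocity and quality definitions concrete, here is a minimal Python sketch that computes lead time, deployment frequency, change failure rate, and failed deployment recovery time from a list of deployment records, plus the engineering cost ratio. The `Deployment` record shape, the use of the median for lead time and recovery time, and the per-week frequency unit are assumptions made for illustration, not definitions taken from the playbook; adapt them to however your own tooling records commits, deploys, and incidents.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    """One production deployment (hypothetical record shape, not an ESSP schema)."""
    commit_time: datetime                      # when the change was committed
    deploy_time: datetime                      # when it reached production
    failed: bool = False                       # did it cause a production incident?
    restored_time: Optional[datetime] = None   # when service was restored, if it failed


def lead_time_hours(deploys: list[Deployment]) -> float:
    """Median hours from commit to production (assumes at least one deployment)."""
    return median((d.deploy_time - d.commit_time) / timedelta(hours=1) for d in deploys)


def deployment_frequency_per_week(deploys: list[Deployment], window_days: int) -> float:
    """Average number of deployments per week over the observation window."""
    return len(deploys) / (window_days / 7)


def change_failure_rate(deploys: list[Deployment]) -> float:
    """Percentage of deployments that resulted in an incident."""
    return 100 * sum(d.failed for d in deploys) / len(deploys)


def recovery_time_hours(deploys: list[Deployment]) -> Optional[float]:
    """Median hours to restore service after a failed deployment, or None if nothing failed."""
    durations = [
        (d.restored_time - d.deploy_time) / timedelta(hours=1)
        for d in deploys
        if d.failed and d.restored_time is not None
    ]
    return median(durations) if durations else None


def engineering_cost_ratio(engineering_spend: float, revenue: float) -> float:
    """Engineering spend as a percentage of revenue."""
    return 100 * engineering_spend / revenue


# Hypothetical sample: four deployments observed over a two-week window.
t0 = datetime(2025, 1, 6, 9, 0)
deploys = [
    Deployment(t0, t0 + timedelta(hours=4)),
    Deployment(t0, t0 + timedelta(hours=10), failed=True, restored_time=t0 + timedelta(hours=11)),
    Deployment(t0, t0 + timedelta(hours=6)),
    Deployment(t0, t0 + timedelta(hours=8)),
]

print(lead_time_hours(deploys))                        # → 7.0
print(deployment_frequency_per_week(deploys, 14))      # → 2.0
print(change_failure_rate(deploys))                    # → 25.0
print(recovery_time_hours(deploys))                    # → 1.0
print(engineering_cost_ratio(2_000_000, 10_000_000))   # → 20.0
```

The median is used for the time-based metrics because a single very slow change or long outage would otherwise dominate a mean; if your organization reports means or percentiles instead, swap the aggregation but keep it consistent over time.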

Three-Step Improvement Process: Use ESSP’s step-by-step approach to drive changes:

Identify Pain Points and Barriers – Gather data and feedback. Establish your baseline metrics for each zone. Example: Is lead time lagging? Are developers complaining about code review wait times? Conduct surveys or focus groups to pinpoint friction.
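Establishing a baseline can be as simple as summarizing a few weeks of observations per metric and turning survey answers into a single number. The sketch below assumes hypothetical weekly data and a 1 to 5 survey scale, and it treats answers of 4 or 5 as "satisfied"; that threshold is a common survey convention chosen here for illustration, not something the playbook prescribes.

```python
from statistics import median

# Hypothetical weekly observations collected while identifying pain points.
weekly_observations = {
    "lead_time_hours": [30.0, 26.5, 41.0, 28.0],
    "deploys_per_week": [3, 5, 4, 4],
    "change_failure_rate_pct": [12.0, 8.0, 15.0, 10.0],
}

# Hypothetical answers to "How satisfied are you with your tools?" (1 = very unhappy, 5 = very happy).
tooling_survey = [5, 4, 2, 3, 4, 5, 1, 4]


def baseline(series: list[float]) -> float:
    """Median of the observations, so one unusual week does not skew the baseline."""
    return median(series)


def percent_satisfied(responses: list[int], threshold: int = 4) -> float:
    """Share of respondents who answered at or above `threshold` (an assumed cut-off)."""
    return 100 * sum(r >= threshold for r in responses) / len(responses)


baselines = {name: baseline(values) for name, values in weekly_observations.items()}
baselines["tooling_satisfaction_pct"] = percent_satisfied(tooling_survey)

for name, value in baselines.items():
    print(f"{name}: {value}")
```

Recording the baseline explicitly, rather than relying on memory, is what later lets you tell whether an intervention actually moved a metric.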

Evaluate and Prioritize – Determine what needs to change to achieve your goals. Engage stakeholders to clarify which zones matter most right now (e.g. Quality issues affecting customers vs. Velocity for time-to-market). Brainstorm interventions for each barrier (e.g. invest in CI/CD to improve deployment frequency, or adopt GitHub Copilot to assist developers). Prioritize the initiatives likely to have the biggest impact on your business outcomes.
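One lightweight way to turn the prioritization discussion into a ranked list is to score each candidate intervention by estimated impact per unit of effort, weighted by how much stakeholders currently care about its zone. This scoring scheme, the 1 to 5 scales, the zone weights, and the candidate interventions are all hypothetical; the playbook does not prescribe a formula, so treat this as a conversation aid rather than a decision rule.

```python
from dataclasses import dataclass


@dataclass
class Intervention:
    name: str
    zone: str     # "quality", "velocity", "happiness", or "business"
    impact: int   # team's estimate, 1 (low) to 5 (high)
    effort: int   # team's estimate, 1 (low) to 5 (high)


# Hypothetical stakeholder weights: here, quality issues affecting customers matter most right now.
zone_weights = {"quality": 1.5, "velocity": 1.0, "happiness": 1.0, "business": 1.0}


def score(item: Intervention) -> float:
    """Zone-weighted impact per unit of effort; higher means tackle it sooner."""
    return zone_weights[item.zone] * item.impact / item.effort


# Hypothetical candidates produced by the brainstorming step.
candidates = [
    Intervention("Invest in CI/CD pipeline", "velocity", impact=4, effort=3),
    Intervention("Pilot GitHub Copilot with one team", "velocity", impact=3, effort=1),
    Intervention("Add automated regression tests", "quality", impact=5, effort=2),
]

ranked = sorted(candidates, key=score, reverse=True)
for item in ranked:
    print(f"{score(item):.2f}  {item.name}")
```

The weights are where stakeholder input shows up: changing the quality weight back to 1.0 would move the Copilot pilot to the top, which is a useful prompt for discussing what the business needs most right now.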

Implement, Monitor, Adjust – Execute the changes and closely track their effect on your metrics. Use leading indicators (such as commit rate and PR feedback cycle time) for early signs of improvement, and watch lagging indicators (such as change failure rate and deployment time) for true outcomes. If a metric isn’t moving in the desired direction, investigate and adjust your approach. This is an iterative cycle: continuous improvement is key.
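The "is this metric moving in the desired direction?" check can be made explicit by recording, for each metric, whether higher or lower is better and comparing the current value with its baseline. In this sketch the metric values and the 2% tolerance, which keeps small noise from counting as progress, are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class MetricStatus:
    name: str
    kind: str               # "leading" or "lagging"
    baseline: float
    current: float
    higher_is_better: bool

    def percent_change(self) -> float:
        """Relative change from the baseline, in percent."""
        return 100 * (self.current - self.baseline) / self.baseline

    def improving(self, tolerance_pct: float = 2.0) -> bool:
        """True if the metric moved in the desired direction by more than the tolerance."""
        change = self.percent_change()
        return change > tolerance_pct if self.higher_is_better else change < -tolerance_pct


# Hypothetical readings a few weeks after an intervention.
metrics = [
    MetricStatus("PR feedback cycle time (h)", "leading", baseline=20.0, current=14.0, higher_is_better=False),
    MetricStatus("Commits per week", "leading", baseline=120.0, current=118.0, higher_is_better=True),
    MetricStatus("Change failure rate (%)", "lagging", baseline=11.0, current=9.0, higher_is_better=False),
]

needs_attention = [m.name for m in metrics if not m.improving()]
for m in metrics:
    print(f"[{m.kind}] {m.name}: {m.percent_change():+.1f}% improving={m.improving()}")
```

A flagged metric is a prompt to investigate, not a verdict: a flat leading indicator early on may simply mean the change has not had time to take effect.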

Guiding Principles for Application:

• Systems Thinking: Treat your engineering process as a system of interconnected parts. Avoid tunnel vision on a single metric – improvements should elevate the whole system, not sabotage another part. (Example: Don’t celebrate faster deploys if quality crashes as a result.) Always consider how changes in one area might affect others downstream.

• Team Involvement: Involve engineers early and often. Improvements are more successful when developers co-create the solutions and feel ownership. This could mean forming working groups to analyze data or pilot new tools.

• Right Metrics, Right Use: Be intentional in selecting metrics. Ensure everyone understands what each metric represents, and avoid gaming metrics (treat them as indicators, not as targets in isolation). Focus on trends over time rather than single data points or shallow comparisons to industry benchmarks. Use paired metrics for context: for example, track change failure rate alongside deployment speed so that speed gains do not come at the expense of quality.

• Measure Cost vs Benefit: Consider the effort required to gather a metric versus the insight it provides. Start with easily accessible data, even if that means surveys or manually collected data, and evolve to more advanced telemetry as you grow. The goal is actionable insight, not analysis paralysis.

• Continuous Improvement Culture: Frame the ESSP initiative as a long-term journey. Celebrate improvements, learn from regressions, and iterate. Encourage a blameless culture where metrics are used to learn and improve the system, not to punish individuals. Over time, this builds a culture of excellence where every engineer understands how their work contributes to business success.
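The systems-thinking and paired-metrics principles above can be combined into a simple guardrail: look at the trend of a speed metric and its quality counterpart together, and warn when deployments are speeding up while failures are rising too. The sketch uses an ordinary least-squares slope as the trend measure, which is a choice made here for illustration, and the weekly series are hypothetical.

```python
def trend(values: list[float]) -> float:
    """Least-squares slope per period; positive means the metric is rising over time."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two data points to estimate a trend")
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    num = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values))
    den = sum((x - mean_x) ** 2 for x in range(n))
    return num / den


def paired_check(speed: list[float], failures: list[float]) -> str:
    """Read a speed metric together with its quality counterpart instead of in isolation."""
    speed_up = trend(speed) > 0
    failures_up = trend(failures) > 0
    if speed_up and failures_up:
        return "warning: shipping faster, but failing more often"
    if speed_up:
        return "ok: shipping faster without more failures"
    return "no speed gain yet"


# Hypothetical weekly series over five weeks.
deploys_per_week = [4, 5, 6, 7, 8]
cfr_pct = [10, 11, 13, 14, 16]

print(paired_check(deploys_per_week, cfr_pct))
```

Looking at slopes over several periods, rather than comparing two individual weeks, is one way to follow the "trends over time rather than single data points" advice.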

Using this cheat sheet, engineering leaders can quickly recall the core of the ESSP: focus on the right things (quality, velocity, and developer happiness, as the foundation for business outcomes) with the right metrics, follow a disciplined improvement loop, and create an environment where data-informed decisions drive better outcomes. By applying these concepts, you’ll be equipped to turn ambitious goals, like leveraging AI for competitive advantage or boosting team productivity, into a concrete, trackable action plan.

Next step: Dive into the full playbook for detailed guidance and real examples tailored to put these ideas into action.