From Clever Chatbot to Problem-Solving Powerhouse: Unpacking OpenAI's o3 & o4 Magic

The Power of Interwoven Intelligence

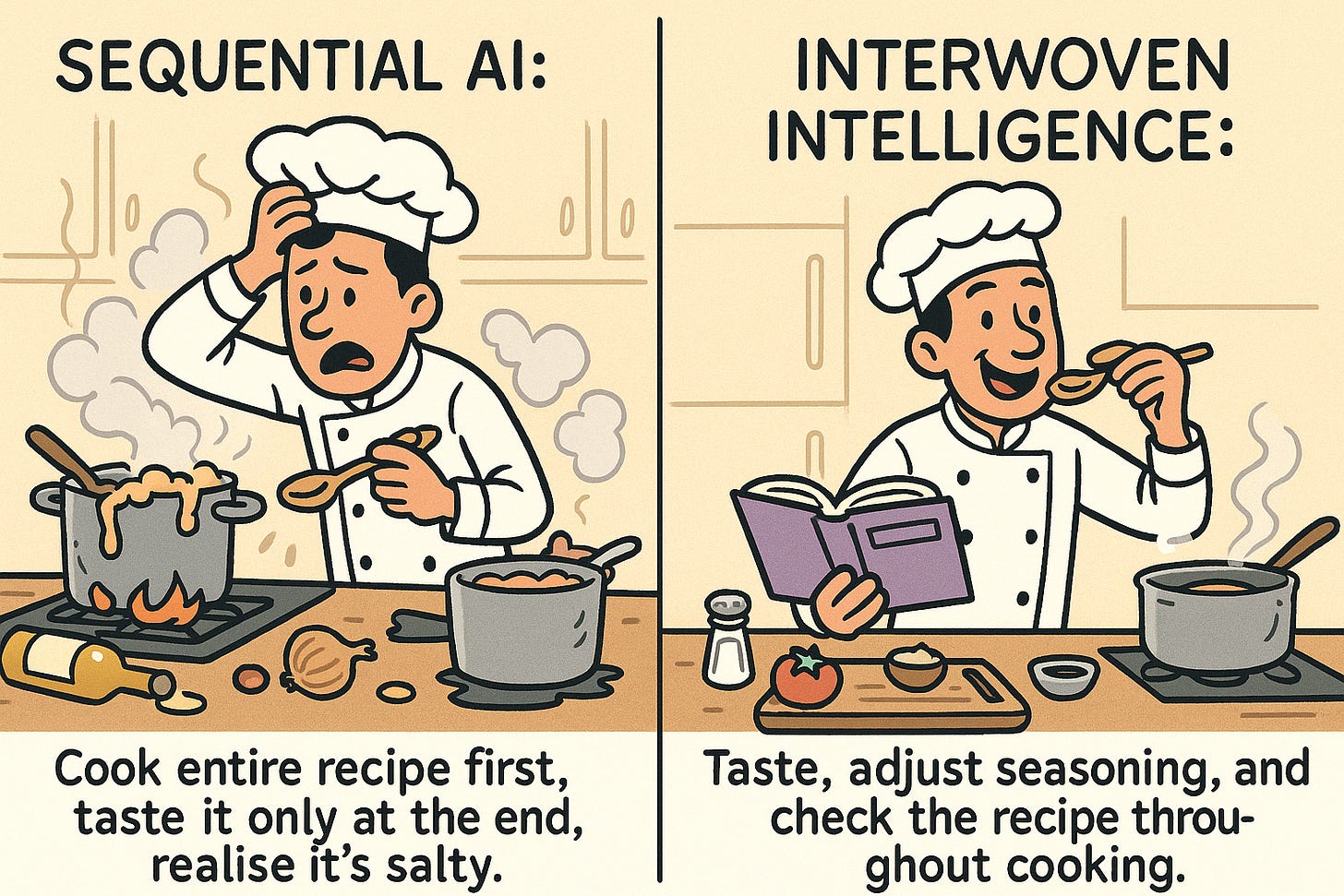

Ever felt like your favourite AI assistant, while brilliant at spinning words and answering questions, could do with a good toolkit? Like a meticulous chef who suddenly gets access to every gadget imaginable? Well, that's precisely the upgrade OpenAI's latest o3 and o4-mini models represent. They're not just reading and writing; they're thinking and doing, and it's all thanks to a clever new approach that integrates tools right into their core reasoning process: Interwoven Intelligence.

Think of it this way: traditional large language models (LLMs) are like incredibly knowledgeable people who can only access information stored in their own brains. They can reason based on what they know, but if they need up-to-date facts, to perform a calculation, or to analyse a document, they're a bit stuck.

Figure: Traditional LLMs reason based solely on their training data.

Now, imagine giving these brilliant minds the ability to use a search engine, a calculator, a code interpreter, even the power to look at images, all while they're in the middle of figuring things out. That's the essence of what o3 and o4-mini bring to the table.

Diving into the Reasoning Loop: Thinking in Circles (the Good Kind!)

The secret sauce behind this new superpower lies in what's being called an "agentic reasoning loop". Now, that might sound a bit sci-fi, but the core idea is quite intuitive. Imagine you're trying to solve a tricky problem. You might:

1. Think: Consider what you know and the steps involved.

2. Realise you need more info: Maybe you'd Google something, check a document, or ask a friend.

3. Act (use a tool): Perform that search, open the document, or send the text.

4. Incorporate the new information: Use what you've learned to refine your thinking.

5. Repeat steps 1-4 until you have a solution.

In a similar way, instead of a single pass from your question to an answer, these models can engage in a multi-step interaction cycle. They "think" for a bit (this is the familiar chain-of-thought we've heard about), then they might realise they need more information or need to perform a specific action. That's where the "tool use" comes in. They can autonomously decide to call upon a tool – say, a web browser to look up the latest news, or a Python interpreter to crunch some numbers. The result from the tool is then fed back into their "thinking" process, and they continue reasoning, iteratively, until they arrive at a comprehensive and often more accurate answer. This reasoning loop is known as “Think-Act-Reflect”.

Figure: Models that leverage Interwoven Intelligence can pause their reasoning to use tools and incorporate the results, potentially looping multiple times for complex tasks.
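To make the loop a little more concrete, here is a minimal sketch in Python. It is not how o3 or o4-mini are implemented: `scripted_model`, `Step`, `TOOLS` and the toy `calculator` are all hypothetical stand-ins invented for illustration, and the "model" simply follows a fixed script rather than genuinely reasoning.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Step:
    """What the (stand-in) model decides to do next."""
    thought: str
    tool: Optional[str] = None    # name of the tool to call, or None when finished
    tool_input: str = ""
    answer: Optional[str] = None  # set only on the final step


def calculator(expression: str) -> str:
    """Toy tool: evaluates plain arithmetic such as '1200 * 15 / 100'."""
    allowed = set("0123456789.+-*/() ")
    if not set(expression) <= allowed:
        raise ValueError(f"unsupported expression: {expression!r}")
    # eval is tolerable here only because the input is restricted to arithmetic characters
    return str(eval(expression))


TOOLS: dict = {"calculator": calculator}


def scripted_model(question: str, observations: list) -> Step:
    """Hypothetical stand-in for a real model call: one calculation, then an answer."""
    if not observations:
        return Step(thought="I need to compute this.",
                    tool="calculator", tool_input="1200 * 15 / 100")
    return Step(thought="I have the number I need.",
                answer=f"The result is {observations[-1]}.")


def run_loop(question: str, model: Callable = scripted_model, max_steps: int = 5) -> str:
    observations = []
    for _ in range(max_steps):
        step = model(question, observations)        # Think
        if step.answer is not None:                 # Reflect: finished
            return step.answer
        result = TOOLS[step.tool](step.tool_input)  # Act: call the chosen tool
        observations.append(result)                 # feed the result back into the next Think
    raise RuntimeError("no answer within the step budget")


print(run_loop("What is 15% of 1200?"))  # The result is 180.0.
```

The `max_steps` budget is a design choice of this sketch: any loop that lets a model decide when it is finished needs some cap so a confused run cannot go round in circles forever (the bad kind).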

Bye-Bye Limitations: How Integrated Tools Change Everything

This fundamental shift in architecture and training makes o3 and o4-mini significantly better than previous approaches in several key ways:

Solving the "I Don't Know That" Problem: Remember when ChatGPT would politely admit it couldn't browse the web or analyse that PDF you uploaded without specific plugins? Those days are fading. These new models have built-in access to a suite of tools (like web browsing, code execution, file analysis, and even image analysis). So, when you ask a question that requires up-to-date information or involves processing a document, they can often just… do it. Imagine asking: "What's the latest on the new electric car tax incentives, and how do they compare to last year's (here's a link to the old policy)?" The model could browse for the latest info, analyse the linked document, and give you a comprehensive comparison – all in one go!

Tackling Complex Tasks Autonomously: Earlier systems often required you to break down complex tasks into multiple prompts or manually trigger plugins. o3 can handle multi-step problem-solving much more fluidly. Think of it like asking for a quarterly sales report with charts. A traditional LLM might give you a text summary (if you're lucky). But o3 could potentially query a database (if connected via a tool), perform calculations, create visualisations using a code interpreter, and present you with a ready-to-go report. This dramatically increases efficiency and reduces the need for you to be the project manager for your AI assistant.

Reasoning with More Than Just Words: The integration of tools extends to multimodal reasoning. They can now incorporate images (and potentially other formats in the future) directly into their thought process. Asked to identify the largest ship in a photo and predict its docking location? The model can analyse the image, decide it needs a closer look, use an image tool to zoom in, re-analyse, and then provide a more informed answer. It's "thinking with images," not just about them.

More Verifiable and Grounded Responses: Because they can actively seek out information or perform calculations, the responses from o3 and o4-mini are often more useful and verifiable. They can back up their answers with evidence fetched via tools, increasing trust and reducing the likelihood of pure "hallucinations" (making things up). Interestingly, though, they can sometimes hallucinate more than older models on certain knowledge tasks, possibly because their increased "thinking out loud" means they make more claims overall. This remains an ongoing area of development.
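One way to picture what "verifiable" means in practice is a simple grounding check: keep every tool result alongside its source, then confirm that each figure quoted in the answer actually appears in something a tool returned. The sketch below is an illustration only. The `Evidence` type, the `ungrounded_numbers` helper and the sample figures are all made up for this example, and real systems would need far more than a number match.

```python
import re
from dataclasses import dataclass


@dataclass
class Evidence:
    source: str   # where the snippet came from (URL, file name, tool name)
    snippet: str  # the text the tool returned


NUMBER = re.compile(r"\d+(?:\.\d+)?")


def ungrounded_numbers(answer: str, evidence: list) -> list:
    """Return figures quoted in the answer that appear in none of the evidence snippets."""
    seen = set()
    for item in evidence:
        seen.update(NUMBER.findall(item.snippet))
    return [n for n in NUMBER.findall(answer) if n not in seen]


# Made-up snippets standing in for a file-analysis result and a web-search result.
evidence = [
    Evidence("old-policy.pdf", "The credit was capped at 5000 per vehicle."),
    Evidence("web search", "This year the cap rises to 7500 per vehicle."),
]

print(ungrounded_numbers("The cap rose from 5000 to 7500.", evidence))  # []
print(ungrounded_numbers("The cap rose from 5000 to 9000.", evidence))  # ['9000']
```

An empty list does not prove the answer is right, only that its numbers trace back to a source a reader can check.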

AI for the Everyday: Practical Examples You Can Relate To

Let's ditch the tech jargon and see how this new approach could impact your day-to-day:

Planning Your Trip: Instead of hopping between multiple websites, you could ask: "Find me the cheapest flights from London to Barcelona next month, book a hotel near the Sagrada Familia with good reviews, and suggest some vegetarian-friendly restaurants nearby." The AI could use browsing tools to find flights and hotels, read reviews, and even use a maps tool to locate restaurants – all within a single request.

Understanding Complex Documents: Got a dense contract or a lengthy research paper? You could ask: "Summarise the key terms of this contract (uploading the PDF) and flag any clauses that differ from standard industry practice (based on recent web searches)." The AI could use file analysis and web browsing tools to deliver a more insightful and comprehensive answer than a simple summary.

Answering Tricky Questions: Ever wondered about something obscure? Instead of endless Googling, you could ask: "What's the current estimated population of pangolins worldwide, and what are the main threats to their survival (cite your sources)?" The AI could perform multiple targeted web searches, analyse the information, and provide you with an answer, complete with (hopefully) links to the data it found.

The Evolution: From Sequential Steps to Interwoven Intelligence

Previously, even with systems that could use tools (like ChatGPT with plugins), the process often felt somewhat sequential. The AI would reason, decide to use a tool, get the result, and then formulate an answer. There wasn't much back-and-forth or iterative refinement within a single response.

Figure: Sequential reasoning used by older systems

o3, on the other hand, can weave multiple tool calls into its thought process. It might search the web, find a piece of information, then use a code interpreter to analyse that data, and then search again to corroborate its findings – all before presenting you with the final answer. It’s like watching a detective gather clues from multiple sources and piece them together in real-time.

Figure: Multi-step tool integration of o3/o4-mini, showcasing the increased flexibility and depth of the new approach.
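The difference between the two patterns can be sketched with a toy comparison. Everything here is an assumption for illustration: `FAKE_WEB` holds made-up figures, `search` and `percent_change` are hypothetical tools, and the order of calls is hard-coded, whereas in the real models it is the model itself that decides which tool to reach for next.

```python
# Canned "search results": made-up figures used purely for illustration.
FAKE_WEB = {"incentive last year": "5000", "incentive this year": "7500"}


def search(query: str) -> str:
    """Hypothetical search tool backed by the canned results above."""
    return FAKE_WEB[query]


def percent_change(old: str, new: str) -> str:
    """Hypothetical compute tool: percentage change from old to new."""
    return f"{(float(new) - float(old)) / float(old) * 100:.0f}%"


def sequential_answer() -> str:
    """Older pattern: reason, make a single tool call, answer with whatever came back."""
    this_year = search("incentive this year")
    return f"This year's incentive is {this_year}; no comparison was possible in one call."


def interwoven_answer():
    """Newer pattern: each tool result informs the next call before answering."""
    trace = []
    this_year = search("incentive this year")
    trace.append("search")
    last_year = search("incentive last year")       # follow-up prompted by the first result
    trace.append("search")
    change = percent_change(last_year, this_year)   # crunch the two results together
    trace.append("compute")
    return f"The incentive changed by {change} ({last_year} -> {this_year}).", trace


print(sequential_answer())
answer, trace = interwoven_answer()
print(answer)  # The incentive changed by 50% (5000 -> 7500).
print(trace)   # ['search', 'search', 'compute']
```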

Coding Supercharged: o3/o4 and the Future of Code Generation (and Debugging!)

Now, let's bring this back to the world of coding and consider GitHub Copilot. Copilot is fantastic at code completion, suggesting lines and blocks of code as you type. It's like having a coding partner who's incredibly familiar with syntax and common patterns.

However, imagine what happens when you need to tackle a more complex coding challenge, debug a tricky error, or integrate with a specific API. This is where the reasoning and tool-using capabilities of o3 and o4-mini could take code generation to a whole new level.

With these models, a developer could potentially:

Describe a complex feature in natural language, and the AI could not only generate the initial code but also use a search tool to find relevant API documentation, a code interpreter to test snippets, and even a file system tool to organise the generated files.

Paste in a block of code that's throwing errors, and the AI could use a code execution tool to run the code, identify the precise error, and then suggest and even test a fix – much like a developer would in their own environment. This goes beyond static analysis and allows for dynamic debugging driven by the AI itself.

Ask for code that interacts with a specific external service. If the developer provides the API documentation (perhaps via a file or a URL), o3 could potentially understand the API structure and generate code that correctly makes the necessary calls.
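The "run it, read the error, try a fix, run it again" idea from the debugging scenario above can be sketched in a few lines of Python. This is only a rough illustration under stated assumptions: `toy_fixer` is a hypothetical stand-in for the model and knows exactly one trick, and `exec` is used for brevity, whereas a real code execution tool would run untrusted code in a sandbox.

```python
import traceback


def run_snippet(source: str):
    """Execute source in a fresh namespace; return None on success or the error text."""
    namespace = {}
    try:
        exec(source, namespace)  # illustration only: never exec untrusted code unsandboxed
        return None
    except Exception:
        return traceback.format_exc(limit=1)


def debug_loop(source: str, propose_fix, max_attempts: int = 3):
    """Run, read the error, ask for a fix, re-run. Returns (working source, errors seen)."""
    errors = []
    for _ in range(max_attempts):
        error = run_snippet(source)
        if error is None:
            return source, errors
        errors.append(error)
        source = propose_fix(source, error)
    raise RuntimeError("still failing after max_attempts")


def toy_fixer(source: str, error: str) -> str:
    """Hypothetical stand-in for the model: patches one known mistake."""
    if "ZeroDivisionError" in error:
        return source.replace("len(values)", "max(len(values), 1)")
    return source


buggy = "values = []\naverage = sum(values) / len(values)\n"
fixed, errors = debug_loop(buggy, toy_fixer)
print(fixed)
print(len(errors))  # 1
```

The point of the sketch is the feedback path: the fix is chosen from the actual runtime error, which is what separates this from static analysis.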

While Copilot excels at immediate, context-aware code completion, o3 and o4-mini have the potential to act as more autonomous coding assistants capable of handling more intricate tasks that require deeper reasoning, external information, and iterative problem-solving.

When to Unleash the o3/o4 Power (and When Cheaper Options Might Suffice)

This advanced capability naturally comes with potentially higher computational costs. So, when should a developer consider using these more powerful models versus existing, potentially cheaper alternatives?

Consider o3/o4-mini when:

The task is complex and requires multi-step reasoning or external information. If a simple, knowledge-based answer suffices, a cheaper model might do the trick.

You need verifiable answers or want the AI to work with external data (web, files, etc.). If accuracy and grounding are paramount, the tool-using capabilities are a major advantage.

You want to automate more complex workflows and reduce the need for manual orchestration. The autonomous nature of these models shines when handling multi-stage processes.

You're working on challenging coding or debugging tasks. The potential for intelligent code analysis and autonomous fixing can save significant development time.

You need to process and understand documents or other non-textual data. Their multimodal and file analysis capabilities open up new possibilities.

Cheaper models (including the current generation of Copilot for many use cases) might be perfectly adequate when:

The task is straightforward and can be answered from the model's internal knowledge. For simple content generation or basic question answering, the extra "brainpower" (and potential cost) might not be necessary.

Cost is a very significant constraint and the task's complexity doesn't justify the added expense. For high-volume, low-complexity applications, efficiency might outweigh advanced reasoning.

You have very specific and well-defined tasks where simpler models have been fine-tuned to perform well. In such cases, the targeted training of a cheaper model might be more effective and cost-efficient.
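The two checklists above can be condensed into a rough routing heuristic. Treat this as a sketch rather than a recommendation: the `Task` fields, the tier names "reasoning" and "lightweight", and the thresholds are all assumptions chosen for illustration, and a real decision would also weigh actual pricing and latency.

```python
from dataclasses import dataclass


@dataclass
class Task:
    multi_step: bool = False            # needs several reasoning or tool steps
    needs_external_data: bool = False   # web, files, images or other non-text input
    hard_coding_task: bool = False      # tricky debugging or intricate code changes
    cost_sensitive: bool = False        # high volume, or budget is the main constraint
    has_fine_tuned_model: bool = False  # a simpler model is already tuned for this job


def choose_tier(task: Task) -> str:
    """Return 'reasoning' (o3/o4-mini class) or 'lightweight' (cheaper model)."""
    if task.has_fine_tuned_model:
        return "lightweight"
    signals = sum([task.multi_step, task.needs_external_data, task.hard_coding_task])
    # Assumed rule: when cost matters, ask for stronger evidence of complexity.
    threshold = 2 if task.cost_sensitive else 1
    return "reasoning" if signals >= threshold else "lightweight"


print(choose_tier(Task()))                                 # lightweight
print(choose_tier(Task(multi_step=True)))                  # reasoning
print(choose_tier(Task(multi_step=True, cost_sensitive=True)))  # lightweight
```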

Mapping this back to usage within GitHub Copilot, this table is a good overview of when to use cheaper models versus o3/o4-mini.

Final Thoughts: A Glimpse into the Future of Intelligent Assistance

Interwoven Intelligence models such as OpenAI's o3 and o4-mini represent a fascinating evolution in the world of AI. By seamlessly integrating tools into their reasoning loops, they're moving beyond being mere language generators and are becoming true problem-solving partners.

For developers, this opens up exciting new possibilities for creating more intelligent and autonomous coding tools. While cost-effectiveness will always be a consideration, the potential gains in productivity and the ability to handle complex tasks make these new models a compelling prospect for the future of software development and beyond.

It's like our AI assistants have finally been given the keys to the workshop, and we can't wait to see what they'll build.

![Mermaid graph: User Input -> Reasoning based on internal knowledge -> Generate Output](https://substackcdn.com/image/fetch/$s_!FZC6!,w_1456,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2Fdee28121-97ff-46b0-801b-502af3b077de_1486x140.png)

![Mermaid graph: Request -> Think -> Act -> Reflect; Reflect -> Response when finished; Reflect -> Think when more context is needed](https://substackcdn.com/image/fetch/$s_!aKgH!,w_1456,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F1218989f-5803-46c4-bb87-bb7aa51f4c0e_1575x265.png)

![Mermaid graph: User Query -> Reasoning -> Use Tool?; Yes -> Tool -> Generate Output; No -> Generate Output](https://substackcdn.com/image/fetch/$s_!dvw7!,w_1456,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F0c6ed35b-8512-4dd5-9a0c-54ce2bb5d3ea_1600x266.png)

![Mermaid graph: User Query -> Reasoning -> Need Tool?; No -> Generate Output; Yes -> Use Tool -> back to Reasoning with the tool result](https://substackcdn.com/image/fetch/$s_!7n7E!,w_1456,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F6765a1bf-17ce-44ad-a312-7611b78fc337_1556x434.png)